You Are Automating the Wrong Things: Let the Robots Do This, but Write That Yourself

Automation Gold: Welcome sequences, lead scoring, and routing that run on autopilot

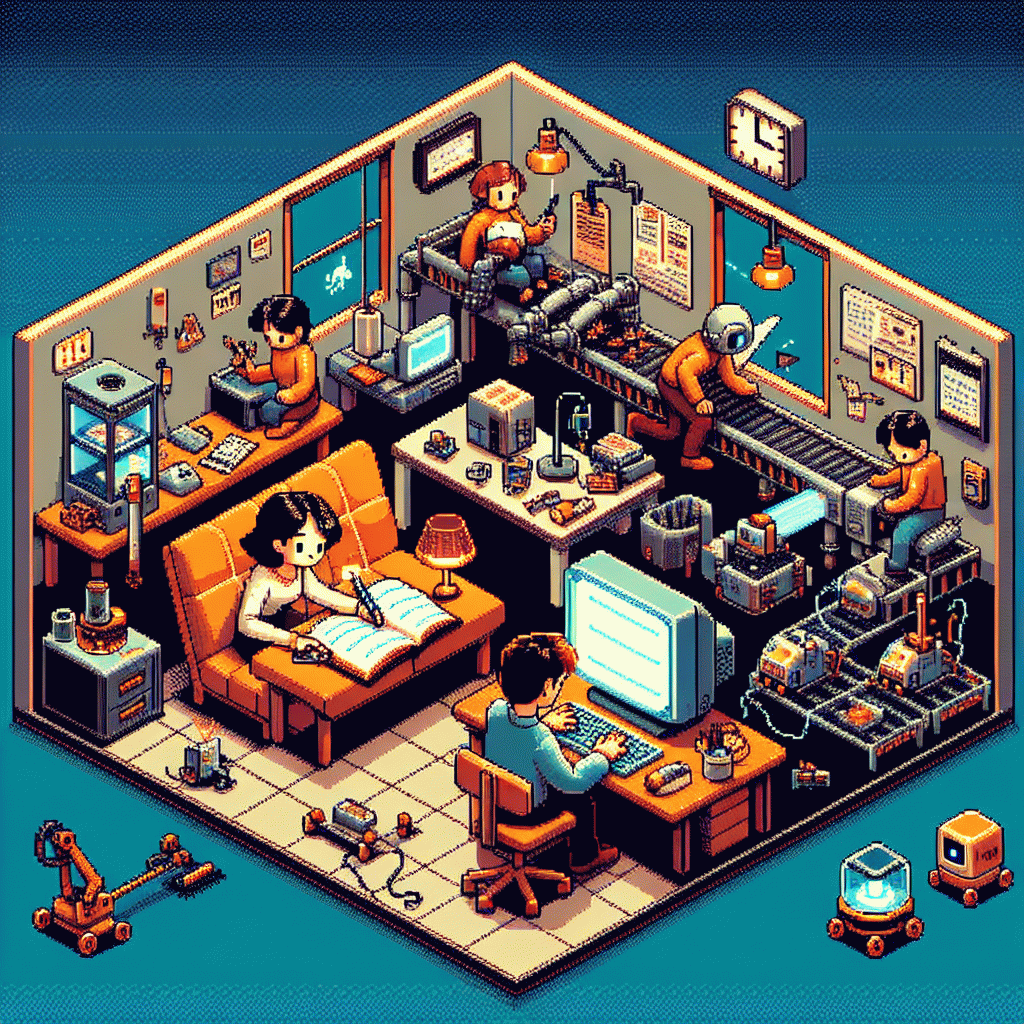

Think of automation as the backstage crew, not the lead actor. Use it to deliver a clean, predictable welcome: an immediate acknowledgement, a quick hit of value, a single clear next step, and a timed follow-up that feels handcrafted. Automate the cadence, personalization tokens, and conditional branches so every new contact sees relevant content in the right order, but have people write the first message and the human follow-up that builds trust.
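To make the split concrete, here is a minimal Python sketch of a welcome sequence. Automation owns only the timing, token filling, and branching, while the copy itself is treated as human-written. The step list, the flag names (`meeting_booked`), the `needs_human` marker, and the placeholder wording are all hypothetical, not taken from any particular marketing platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from string import Template
from typing import Optional

@dataclass
class Step:
    delay: timedelta               # offset from the signup time
    template: Template             # copy written by a person; automation only fills tokens
    skip_if: Optional[str] = None  # hypothetical contact flag that suppresses this step
    needs_human: bool = False      # True: queue for a person to send, never auto-send

# Hypothetical sequence; the wording is placeholder text, not recommended copy.
WELCOME = [
    Step(timedelta(0), Template("Thanks for signing up, $first_name. You're in.")),
    Step(timedelta(hours=1), Template("Here is the guide most $industry teams start with.")),
    Step(timedelta(days=1), Template("One next step: book a 15-minute walkthrough."),
         skip_if="meeting_booked"),
    Step(timedelta(days=3), Template("$first_name, a quick note from $owner_name."),
         needs_human=True),
]

def schedule(contact: dict, signup_at: datetime) -> list[tuple[datetime, str, bool]]:
    """Return (send_time, rendered_text, needs_human) for every step that applies."""
    plan = []
    for step in WELCOME:
        if step.skip_if and contact.get(step.skip_if):
            continue  # conditional branch: the contact already did what this step asks
        text = step.template.safe_substitute(contact)  # unknown tokens are left as-is
        plan.append((signup_at + step.delay, text, step.needs_human))
    return plan
```

Using `safe_substitute` rather than `substitute` means a missing token leaves `$name` visible instead of raising, which is easy to catch in a human review pass.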

Make lead scoring an evidence-driven engine, not a magic number. Combine explicit signals, such as demo requests and pricing-page visits, with implicit behavior, such as repeat content consumption and email clicks. Weight recent activity more heavily, apply decay to stale activity, and keep a few strong boosters for high-intent actions. For example, assign the most points to a booked meeting or a reply, moderate points for content depth, and subtract points after long periods of inactivity. Then recalibrate the thresholds against actual conversions every month.
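A scoring engine along those lines can be sketched in a few lines of Python. The point values, the 14-day half-life used for decay, and the 30-day inactivity penalty are all hypothetical placeholders chosen for illustration; the monthly calibration step would replace them with numbers fitted to your own conversions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical point values; calibrate against your own conversion data.
BASE_POINTS = {
    "meeting_booked": 40,  # strong booster
    "email_reply": 30,     # strong booster
    "demo_request": 25,    # explicit signal
    "pricing_visit": 15,   # explicit signal
    "content_view": 5,     # implicit signal: content depth
    "email_click": 3,      # implicit signal
}
HALF_LIFE_DAYS = 14       # assumption: a signal loses half its weight every 14 days
INACTIVITY_DAYS = 30      # assumption: contacts quiet for 30+ days are penalized
INACTIVITY_PENALTY = 20

@dataclass
class Event:
    kind: str
    at: datetime

def score(events: list[Event], now: datetime) -> float:
    """Sum exponentially decayed points per event, then subtract for long inactivity."""
    total = 0.0
    for e in events:
        age_days = (now - e.at).total_seconds() / 86400
        decay = 0.5 ** (age_days / HALF_LIFE_DAYS)  # recency weighting
        total += BASE_POINTS.get(e.kind, 0) * decay
    last = max((e.at for e in events), default=None)
    if last is None or now - last > timedelta(days=INACTIVITY_DAYS):
        total -= INACTIVITY_PENALTY
    return round(total, 1)
```

With these numbers, a meeting booked today plus a pricing visit two weeks ago scores 40 + 7.5 = 47.5, while a lone content view from two months ago goes negative.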

Routing should be a deterministic machine that knows when to wake a human. Route hot leads above the threshold immediately, route by geography or product interest, and batch medium-scoring leads into a timed sequence for SDR outreach. Tie routing to SLAs so sales knows what is expected: an instant ping for scores above X, a 24-hour follow-up for Y, and a handoff checklist that includes context, recent activity, and suggested next steps. Automation assigns, tags, and surfaces insights; humans close.
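The routing rules can be sketched as a pure function: the same lead always lands in the same queue with the same SLA. The thresholds 70 and 40 stand in for the article's X and Y, and the territory map and queue names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical thresholds standing in for the article's X (hot) and Y (warm).
HOT_THRESHOLD = 70
WARM_THRESHOLD = 40

# Hypothetical territory map: (region, product interest) -> sales queue.
TERRITORIES = {
    ("EMEA", "analytics"): "emea-analytics",
    ("NA", "analytics"): "na-analytics",
}
DEFAULT_QUEUE = "general-sales"

@dataclass
class Lead:
    name: str
    score: float
    region: str
    product: str
    recent_activity: list[str] = field(default_factory=list)

@dataclass
class Assignment:
    queue: str
    sla_hours: Optional[float]  # 0 = ping a human now; None = no sales SLA
    handoff: dict               # the handoff checklist sales receives

def route(lead: Lead) -> Assignment:
    """Deterministic routing: identical input always yields the identical assignment."""
    handoff = {
        "context": f"{lead.name} ({lead.region}, {lead.product}), score {lead.score}",
        "recent_activity": lead.recent_activity[-3:],  # last three touches
        "suggested_next_step": "call" if lead.score >= HOT_THRESHOLD else "email",
    }
    if lead.score >= HOT_THRESHOLD:
        queue = TERRITORIES.get((lead.region, lead.product), DEFAULT_QUEUE)
        return Assignment(queue, 0, handoff)
    if lead.score >= WARM_THRESHOLD:
        return Assignment("sdr-sequence", 24, handoff)
    return Assignment("nurture", None, handoff)
```

Keeping routing free of randomness and hidden state makes it easy to audit: you can replay last month's leads through a new threshold and see exactly who would have moved.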

Put guardrails around the robots. Suppress duplicates, limit outreach frequency, require human review for high-value touchpoints, and A/B test the automation logic while keeping the copy human-written. Actionable next steps: map your current journeys, pick three signals to score, set one routing threshold, and run a 30-day audit to adjust. Let the machines manage the choreography so creative humans can focus on the performance.
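Two of those guardrails, duplicate suppression and a frequency cap, fit in a short sketch. It assumes contacts are identified by email address and uses a hypothetical cap of three sends per rolling seven days; the class and function names are illustrative, not from any specific tool.

```python
from collections import defaultdict
from datetime import datetime, timedelta

MAX_SENDS_PER_WEEK = 3  # hypothetical frequency cap

def normalize_email(email: str) -> str:
    return email.strip().lower()

def dedupe(contacts: list[str]) -> list[str]:
    """Drop duplicate addresses (case- and whitespace-insensitive), keeping the first."""
    seen: set[str] = set()
    unique = []
    for c in contacts:
        key = normalize_email(c)
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique

class FrequencyCap:
    """Allow at most max_sends messages per contact within a rolling window."""

    def __init__(self, max_sends: int = MAX_SENDS_PER_WEEK,
                 window: timedelta = timedelta(days=7)):
        self.max_sends = max_sends
        self.window = window
        self.history: dict[str, list[datetime]] = defaultdict(list)

    def allow(self, contact: str, now: datetime) -> bool:
        """Return True and record the send if the contact is still under the cap."""
        key = normalize_email(contact)
        recent = [t for t in self.history[key] if now - t < self.window]
        self.history[key] = recent  # forget sends that fell out of the window
        if len(recent) >= self.max_sends:
            return False
        recent.append(now)
        return True
```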

Human-Only Zone: Brand voice, thought leadership, and sensitive outreach you should handcraft

Machines can crank out captions and schedule posts, but the parts of your brand that need teeth, warmth, and a wink must be born from a human keyboard. Brand voice is less about vocabulary and more about intent: what you decide to care about, whom you cheer for, and how you apologize when you mess up. Those are judgement calls, tonal gymnastics, and personality — not checkboxes.

Thought leadership thrives on original observation, risk, and context. A short essay that reframes an industry trend needs a mind that has lived in the trenches or asked the awkward questions — not an LLM regurgitating stats. Use humans to synthesize contradictory signals into a novel take, to cite the right people, and to challenge your own assumptions before publishing. Readers smell boilerplate; they reward brave, specific thinking.

Sensitive outreach is a minefield where a small miswording can escalate. Customer apologies, recruitment rejections, investor updates, and influencer negotiations should be handled by people who can read tone, history, and implied meaning. Scripts can map the structure, but delivery needs human judgment: when to bend policy, when to follow it, and when to pick up the phone. That nuance is what protects reputation and relationships.

Practical process: automate the scaffolding but handcraft the heart. Draft templates for greetings, data pulls, and ops updates, then mark the checkpoints that require a human rewrite and sign-off. Create a quick rubric that rates impact level, audience closeness, and legal sensitivity; anything above the threshold goes to a named owner for polish. Reward editors who catch tone slips, and keep review loops fast rather than bureaucratic: humans plus tools, not robots replacing humans.
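The rubric can be expressed as a small scoring function. This sketch assumes a 1 to 3 scale per dimension, a hypothetical review threshold of 6 out of 9, and an extra rule (also an assumption) that the highest legal-sensitivity rating always goes to a human regardless of the total.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 6  # hypothetical: totals of 6 or more (out of 9) need a named owner

@dataclass
class Draft:
    title: str
    impact: int              # 1 = internal ops note ... 3 = public, brand-defining
    audience_closeness: int  # 1 = broad list ... 3 = one named person
    legal_sensitivity: int   # 1 = none ... 3 = contracts, HR, regulated claims

def needs_human(draft: Draft) -> bool:
    ratings = (draft.impact, draft.audience_closeness, draft.legal_sensitivity)
    if any(not 1 <= r <= 3 for r in ratings):
        raise ValueError("each rating must be 1, 2, or 3")
    # Assumption: top legal sensitivity always needs a human, whatever the total.
    return sum(ratings) >= REVIEW_THRESHOLD or draft.legal_sensitivity == 3

def assign_reviewer(draft: Draft, owner: str) -> Optional[str]:
    """Return the named owner if the draft needs a human rewrite, else None."""
    return owner if needs_human(draft) else None
```

An ops update rated 1/1/1 passes straight through, while a customer apology rated 3/3/2 lands with its owner.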

The Hybrid Sweet Spot: Let AI draft; you punch up headlines, hooks, and CTAs

Think of AI as your industrious intern who loves to draft but has zero taste. Let it generate drafts, outlines, and variants so you can spend your time on the three persuasion hot spots: headlines, opening hooks, and CTAs. Robots can crank out content; humans decide whether it sells, surprises, and feels human.

Playbook: prompt the AI for a clean draft plus five alternative headlines, five opening hooks, and five CTAs. Scan the options, pick the strongest directions, then rewrite each chosen headline in your brand voice: shorten it, inject emotion, and add a twist. Save the boring AI lines as backups for A/B tests.

Punch-up formulas: Headlines — "How to [benefit] without [objection]", "X things that make [result] faster". Hooks — open with a surprising stat, a micro-story, or a bold question like "What if you could...?" CTAs — use benefit + immediacy: "Start saving time today", "Claim your seat—limited spots". Keep rhythm snappy and verbs urgent.

Micro-rules: edit to be 10–30% shorter than the AI draft, favor one bold idea per headline, and never let a CTA be polite—ask for action. The sweet spot is simple: automate the heavy lifting, humanize the persuasion. Let the robots do the drafting; you deliver the charm.
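Two of these micro-rules are mechanical enough to lint automatically before a human reviews the copy. This sketch measures length by whitespace-separated word count (an assumption; characters would also work) and flags CTAs that open with a hypothetical list of polite phrases.

```python
# Hypothetical list of openers that make a CTA ask politely instead of asking for action.
POLITE_OPENERS = ("please", "feel free", "if you'd like", "if you want", "maybe")

def shrink_ratio(ai_draft: str, edited: str) -> float:
    """Fraction by which the edited copy is shorter than the AI draft, by word count."""
    before = len(ai_draft.split())
    after = len(edited.split())
    if before == 0:
        raise ValueError("AI draft is empty")
    return (before - after) / before

def check_edit(ai_draft: str, edited: str, cta: str) -> list[str]:
    """Return a list of rule violations; an empty list means the edit passes."""
    issues = []
    ratio = shrink_ratio(ai_draft, edited)
    if not 0.10 <= ratio <= 0.30:
        issues.append(f"length change {ratio:.0%} is outside the 10-30% target")
    if cta.strip().lower().startswith(POLITE_OPENERS):
        issues.append("CTA opens politely instead of asking for action")
    return issues
```

A lint like this only catches the measurable part; whether the edit kept one bold idea per headline is still a human call.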

Triggers That Convert (Not Creep): Behavior-based journeys done right

Think of behavior-based triggers as a polite robotic concierge: they watch signals, knock at the right moment, and hand you an opportunity to converse — but they should not improvise your voice. Automate the when and who (segmentation, timing, suppression), and keep the what — the message that persuades — written by humans who know tone, context, and nuance.

Good triggers are tiny, respectful, and useful: a cart nudge once a shopper has been gone for 3 minutes, product-page retargeting when someone lingers for 30+ seconds, and a milestone note after a first purchase. Set clear guardrails, including frequency caps, opt-down paths (letting people choose fewer messages instead of unsubscribing), and cool-off windows, so automation converts without creeping people out.
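Here is one way those triggers and guardrails might fit together in Python. The 3-minute and 30-second thresholds come from the text; the 48-hour cool-off, the cap of two triggered messages per week, the trigger names, and the priority order in `pick_trigger` are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional

CART_EXIT_DELAY = timedelta(minutes=3)    # from the text: nudge after a 3-minute exit
LINGER_THRESHOLD = timedelta(seconds=30)  # from the text: 30+ seconds on a product page
COOL_OFF = timedelta(hours=48)            # hypothetical gap between triggered messages
WEEKLY_CAP = 2                            # hypothetical frequency cap

@dataclass
class Contact:
    opted_down: set[str] = field(default_factory=set)  # trigger types the contact turned off
    sent_at: list[datetime] = field(default_factory=list)

def pick_trigger(cart_items: int, left_site_at: Optional[datetime],
                 page_dwell: timedelta, first_purchase: bool,
                 now: datetime) -> Optional[str]:
    """Map observed behavior to at most one trigger; the priority order is an assumption."""
    if first_purchase:
        return "milestone_note"
    if cart_items > 0 and left_site_at is not None and now - left_site_at >= CART_EXIT_DELAY:
        return "cart_nudge"
    if page_dwell >= LINGER_THRESHOLD:
        return "product_retarget"
    return None

def may_send(contact: Contact, trigger: str, now: datetime) -> bool:
    """Apply the guardrails: opt-down preference, cool-off window, weekly frequency cap."""
    if trigger in contact.opted_down:
        return False
    if contact.sent_at and now - max(contact.sent_at) < COOL_OFF:
        return False
    this_week = [t for t in contact.sent_at if now - t < timedelta(days=7)]
    return len(this_week) < WEEKLY_CAP
```

Keeping "should this fire?" (`pick_trigger`) separate from "are we allowed to send?" (`may_send`) lets you tighten guardrails without touching trigger logic.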

Start small: map three micro-behaviors, pick one trigger, and craft short, empathetic copy for each. Templates help, but use personalization tokens where they matter (recent product, city, or name), then test relevance.

Measure intent, not just opens: track downstream actions and the lift in conversion. Keep a human sign-off or offer a real reply channel; automation should start the conversation, not be the only voice. In practice, iterate on subject lines, trim friction, and celebrate the micro-wins that prove your triggers work.
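Measuring downstream action rather than opens can be as simple as counting, per trigger, how many recipients took a real action within an attribution window, then comparing against a holdout group that received nothing. The 7-day window, the data shapes, and the holdout comparison are assumptions for this sketch.

```python
from collections import defaultdict
from datetime import datetime, timedelta

ATTRIBUTION_WINDOW = timedelta(days=7)  # hypothetical attribution window

def downstream_rate_by_trigger(
    sends: list[tuple[str, str, datetime]],  # (contact_id, trigger, sent_at)
    conversions: dict[str, list[datetime]],  # contact_id -> times of a downstream action
) -> dict[str, float]:
    """Share of each trigger's recipients who acted within the window after the send."""
    sent: dict[str, int] = defaultdict(int)
    acted: dict[str, int] = defaultdict(int)
    for contact_id, trigger, sent_at in sends:
        sent[trigger] += 1
        if any(sent_at <= t <= sent_at + ATTRIBUTION_WINDOW
               for t in conversions.get(contact_id, [])):
            acted[trigger] += 1
    return {trigger: acted[trigger] / sent[trigger] for trigger in sent}

def relative_lift(treated_rate: float, holdout_rate: float) -> float:
    """Lift of the triggered group over a holdout group that received no trigger."""
    if holdout_rate == 0:
        raise ValueError("holdout rate is zero; relative lift is undefined")
    return treated_rate / holdout_rate - 1
```

For example, a 6% downstream rate against a 5% holdout is a 20% relative lift, a number that says far more than an open rate.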

Proof It Works: Metrics, A/B tests, and guardrails to keep quality high

Start from what matters: outcomes, not impressions. Pick two or three metrics that map to real business value, such as conversion lift, read-through rate, and a quality failure rate that flags hallucinations or tone drift. Track time saved per copy cycle and the percentage of pieces that pass a human quality check. Use those numbers to judge where automation should be applied and where a human voice must remain in the loop.

Run A/B tests like a scientist, not like someone flipping a coin. Build experiments that compare human-written control copy with AI-drafted variants, but keep them focused: one variable per experiment, a clear hypothesis, and a success threshold declared in advance. Settle on a practical sample size and run duration before launch rather than waiting for mythical statistical purity; if a performance gap is small but persists across tests, that is actionable information about where to keep people writing.
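A standard way to check a conversion-rate A/B test is a two-proportion z-test, sketched below with only the standard library. The significance level `alpha` and the minimum absolute lift `min_lift` play the role of the predeclared success threshold; the defaults here are illustrative, and the p-value relies on the normal approximation, which is reasonable only when both groups have a fair number of conversions.

```python
import math
from dataclasses import dataclass

@dataclass
class ABResult:
    control_rate: float
    variant_rate: float
    z: float
    p_value: float
    ship_variant: bool

def two_proportion_z_test(control_conv: int, control_n: int,
                          variant_conv: int, variant_n: int,
                          alpha: float = 0.05, min_lift: float = 0.0) -> ABResult:
    """Compare human-written control copy (A) with an AI-drafted variant (B).

    alpha and min_lift (absolute difference in conversion rate) should be
    declared before the test starts, not chosen after looking at the data.
    """
    p_a = control_conv / control_n
    p_b = variant_conv / variant_n
    pooled = (control_conv + variant_conv) / (control_n + variant_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / variant_n))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value, normal approximation
    ship = p_value < alpha and (p_b - p_a) >= min_lift
    return ABResult(p_a, p_b, z, p_value, ship)
```

With 100 of 1,000 conversions for the control and 150 of 1,000 for the variant, z comes out around 3.4, well past the usual 0.05 cutoff; a variant that wins by that margin earns its place, and one that loses tells you where to keep humans writing.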

Install guardrails that stop bad content before it ships. Implement confidence thresholds, toxicity and factuality checks, and semantic similarity scans against a golden set of approved copy. Randomly sample outputs for human review and route edge cases for escalation. Create automatic rollback triggers when quality metrics drop below acceptable bounds so you can scale safely without turning the brand voice into a bad idea factory.
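Two of these guardrails can be sketched without committing to a vendor. The similarity check assumes you already have embedding vectors for the candidate and for the golden set from whatever embedding model you use (not shown), and the 0.8 cutoff is hypothetical. The rollback monitor tracks human pass/fail verdicts on randomly sampled outputs over a rolling window and signals a rollback when the failure rate exceeds a hypothetical limit.

```python
import math
from collections import deque

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def close_to_golden(candidate: list[float], golden: list[list[float]],
                    min_sim: float = 0.8) -> bool:
    """True if the candidate is semantically close to at least one approved example."""
    return max((cosine(candidate, g) for g in golden), default=0.0) >= min_sim

class RollbackMonitor:
    """Rolling failure rate over human-reviewed samples; signals rollback past a limit."""

    def __init__(self, window: int = 50, max_failure_rate: float = 0.05,
                 min_samples: int = 20):
        self.results: deque[bool] = deque(maxlen=window)
        self.max_failure_rate = max_failure_rate
        self.min_samples = min_samples

    def record(self, passed: bool) -> bool:
        """Record one reviewed sample; return True if automation should roll back."""
        self.results.append(passed)
        if len(self.results) < self.min_samples:
            return False  # too few samples to judge yet
        failures = self.results.count(False)
        return failures / len(self.results) > self.max_failure_rate
```

The `min_samples` guard keeps a single early failure from tripping the rollback; tune it alongside the window size and failure limit.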

Make the process operational: a lightweight dashboard that tracks your core metrics, a cadence for experiments, and clear stop rules for failed tests. Feed qualitative reviewer notes back into model prompts and templates so the system learns what to avoid and what to amplify. In short, let the robots chase scale and repetitive polish, but measure everything and keep the final sentence in human hands. Start with one metric and one A/B test this week and iterate from there.