The 3x3 Creative Testing Hack That Slashes Costs and Beats Deadlines

Meet 3x3: nine quick shots to find winners fast

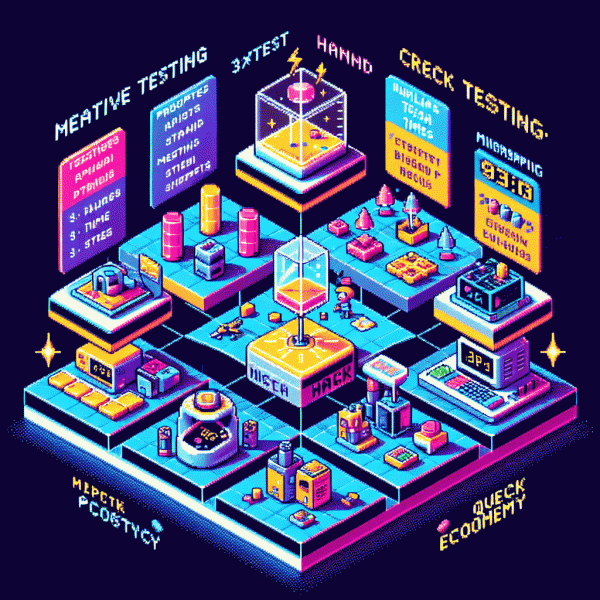

Imagine a contact sheet of creative ideas where you do not have to pick a favorite by gut. Instead, you test nine small bets that tell you what actually works. The grid forces economy: three distinct creative directions across three lightweight variants each. Run them in parallel, learn in days, then stop guessing. This is about speed, clarity, and low waste—design experiments that reveal patterns rather than validate preferences.

Start by choosing three creative treatments that are meaningfully different: a bold visual, a short demo, and a social proof clip. For each treatment, vary one dimension across three options — for example three headlines, three hooks, or three audiences. Keep creative changes minimal so you are testing clean hypotheses. Allocate a micro budget to each of the nine cells, schedule a fixed short window, and keep landing experiences identical so signal stays pure.
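As a rough sketch of the grid described above, the nine cells can be generated by crossing three treatments with three variants and splitting a micro budget evenly. The labels, the $450 total, and the four-day window are illustrative assumptions, not figures from this article.

```python
from itertools import product

# Hypothetical labels: swap in your own treatments and variants.
treatments = ["bold_visual", "short_demo", "social_proof"]
headlines = ["headline_a", "headline_b", "headline_c"]


def build_grid(treatments, variants, total_budget, days):
    """Return one dict per cell, splitting the budget evenly across cells."""
    cells = list(product(treatments, variants))
    per_cell = round(total_budget / len(cells), 2)
    return [
        {"cell_id": i + 1, "treatment": t, "variant": v,
         "budget": per_cell, "days": days}
        for i, (t, v) in enumerate(cells)
    ]


# Assumed numbers: $450 total test budget, 4-day window.
grid = build_grid(treatments, headlines, total_budget=450.0, days=4)
for cell in grid:
    print(cell)
```

Every cell shares the same budget and window, so the only thing that differs between rows is the treatment and the variant being tested.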

Decide rules up front and stick to them. Pick the primary metric that maps to your objective: CTR for awareness, CPC and conversion rate for direct response. Set kill thresholds that prioritize speed: if a cell's primary metric is under half the grid median after the test window (or, for a cost metric like CPC, over double it), pause it. Promote any top cell into a scaling test with 3x budget while running a fresh 3x3 to iterate. Capture the winning element combos as reusable rules for future grids so every run compounds learning.
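The pause-and-promote rule can be sketched in a few lines. This version assumes the primary metric is CTR, where higher is better; the cell names and CTR values are made up for illustration, and a cost metric such as CPC would need the comparison flipped.

```python
from statistics import median


def triage(ctr_by_cell, kill_ratio=0.5, scale_multiplier=3):
    """Pause cells under kill_ratio * median; promote the single top cell."""
    mid = median(ctr_by_cell.values())
    paused = sorted(c for c, v in ctr_by_cell.items() if v < kill_ratio * mid)
    top = max(ctr_by_cell, key=ctr_by_cell.get)
    return {"median": mid, "pause": paused, "promote": top,
            "budget_multiplier": scale_multiplier}


# Made-up CTRs (percent) for the nine cells, for illustration only.
ctr = {"c1": 1.2, "c2": 0.4, "c3": 0.9, "c4": 2.1, "c5": 1.0,
       "c6": 0.3, "c7": 1.1, "c8": 0.8, "c9": 1.5}
result = triage(ctr)
print(result)
```

Writing the thresholds as parameters keeps the rule fixed before the data arrives, which is the point of deciding up front.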

- 🚀 Visual: Test hero image styles to find what stops the scroll.

- 💥 Copy: Try three headline approaches to surface the clearest hook.

- 👍 Audience: Split by interest, lookalike, or placement to spot where creatives resonate best.

Set up in 15 minutes: audiences, angles, and formats

Set a 15‑minute timer and treat this like a sprint, not a thesis. Your goal is to leave the session with a usable test matrix — three audiences, three creative angles, three formats — that you can fire at once. Ignore perfection: prioritize clarity, variety, and measurability so every variant teaches you something.

Audience: choose one high-intent segment, one broad/lookalike, and one niche interest. Pull existing data for fast wins — top converters, cart abandoners, and an interest layer that mirrors your best customer. Give each audience a crisp targeting rule (age, behavior, interest) and move on; you can refine after the first learning window.

Angle: craft three tight messaging directions — a benefit-led promise, a social-proof / credibility piece, and a curiosity or problem-driven hook. Write two short headlines and one 90‑character description for each angle so you can swap copy quickly. Keep language active and outcome-focused; customers buy change, not features.

Format: pick three formats that map to production ease and platform performance — a single image (fast), a 6–15s vertical video (high-impact), and a swipeable carousel or slideshow (detail). Use the same visual assets across formats to isolate angle performance and speed up edits.

Now combine them into a simple grid and assign tiny budgets: equal spend to each cell for 3–5 days or 50–100 clicks total. Track one primary KPI (CPA, ROAS, or CPL) and one secondary signal (CTR or engagement). If a combo underperforms, kill it; if it overperforms, scale incrementally and iterate the losing dimension.
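One way to read the 3–5 day or 50–100 click window is as a lower bound, after which results are worth reading, and an upper bound, after which the test should stop. The sketch below encodes that reading; applying the click counts per cell and the status names are assumptions, not something the article specifies.

```python
def window_status(days_run, clicks, min_days=3, max_days=5,
                  min_clicks=50, max_clicks=100):
    """Classify a cell's test window as running, readable, or stop."""
    if days_run >= max_days or clicks >= max_clicks:
        return "stop"       # upper bound reached: make the call
    if days_run >= min_days or clicks >= min_clicks:
        return "readable"   # lower bound reached: results can be reviewed
    return "running"        # not enough time or clicks yet


print(window_status(days_run=1, clicks=10))
print(window_status(days_run=3, clicks=20))
print(window_status(days_run=2, clicks=120))
```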

This is not a creative retreat — it’s a learning machine. In 15 minutes you produce a repeatable test blueprint that slashes waste, shortens feedback loops, and hands your team clear winners to scale. Ready, set, test.

Run the grid: how to test, tag, and track without chaos

Think of the grid as a creative lab bench: nine tightly scoped experiments, each with one variable and one hypothesis. Label cells like instagram_springA_01, record the audience and creative type alongside the tag, and set a single KPI per cell. This makes results readable at a glance and keeps the team from spinning up duplicate tests.

Run small bets fast: allocate 60% of test budget to exploration, 30% to validation, and 10% to wildcards. Use a simple tagging convention that combines channel, campaign, and cell id, for example instagram_springA_01. Capture start date, creative asset id, and bid strategy in a spreadsheet column so you can filter winners in seconds.
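A minimal sketch of the tagging convention and the 60/30/10 split follows. The helper names are hypothetical, and the parser assumes that neither the channel nor the campaign contains an underscore.

```python
def make_tag(channel, campaign, cell_id):
    """Build a tag such as instagram_springA_01."""
    return f"{channel}_{campaign}_{cell_id:02d}"


def parse_tag(tag):
    """Split a tag back into its three parts (assumes no extra underscores)."""
    parts = tag.split("_")
    if len(parts) != 3:
        raise ValueError(f"unexpected tag format: {tag!r}")
    channel, campaign, cell = parts
    return {"channel": channel, "campaign": campaign, "cell_id": int(cell)}


def split_budget(total):
    """Apply the 60/30/10 exploration, validation, wildcard split."""
    shares = {"exploration": 0.60, "validation": 0.30, "wildcards": 0.10}
    return {name: round(total * share, 2) for name, share in shares.items()}


tag = make_tag("instagram", "springA", 1)
print(tag)
print(parse_tag(tag))
print(split_budget(1000))  # the $1,000 total is an illustrative assumption
```

Because tags parse back into columns, the spreadsheet can be filtered by channel, campaign, or cell without manual cleanup.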

Standardize outcomes so scaling is mechanical, not emotional. Use a three-tier result rubric for quick decisions:

- 🆓 Free: control creative used for baseline comparison and sanity checks

- 🐢 Slow: underperformers that need creative tweaks or audience shifts

- 🚀 Fast: clear winners to scale immediately with doubled spend

Automate tracking into one dashboard and flag winners with color codes. If you seed early social proof to speed up signal on low-volume cells, treat it only as a kickstart and validate with real conversion metrics. Run the grid like a scientist and the chaos turns into a predictable engine for faster, cheaper wins.

Kill or scale: make data-driven calls with confidence

Think of your 3x3 grid as a tiny laboratory where speed and clarity win. Treat each creative+audience pairing as an independent test cell: record cost per conversion, conversion rate and engagement signals, then let the metrics do the shouting. Fast, repeatable evidence is the difference between a lucky guess and a smart bet.

Choose one primary metric aligned to the goal and ignore vanity-metric clutter. For lead generation, pick CPA and back it with CVR. For direct sales, monitor ROAS and cost per purchase. For awareness, use CTR and view rate. Always keep one control creative so you can measure relative lift instead of chasing noise.

Use clear, binary rules to kill or scale. If a cell sits in the bottom third for your primary metric and is more than 25 percent worse than the median after a minimum sample (50 conversions or 3,000 impressions) and seven days, kill it. If a combination shows a lift of more than 20 percent and a stable CPA advantage, scale it.
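The kill-or-scale rules can be sketched as a single decision function. This version makes several assumptions: the primary metric is CPA (lower is better), the minimum sample is read as (50 conversions or 3,000 impressions) plus seven days, "lift" means a CPA at least 20 percent below the control creative, and stability over time is not modeled. The cell data is invented for illustration.

```python
from statistics import median


def decide(cells, control_cpa, min_conversions=50,
           min_impressions=3000, min_days=7):
    """Return wait / kill / scale / hold per cell, using CPA as the metric."""
    cpas = {name: c["cpa"] for name, c in cells.items()}
    mid = median(cpas.values())
    # Bottom third = the cells with the highest (worst) CPA.
    ranked = sorted(cpas, key=cpas.get, reverse=True)
    bottom_third = set(ranked[: len(ranked) // 3])

    decisions = {}
    for name, c in cells.items():
        enough_volume = (c["conversions"] >= min_conversions
                         or c["impressions"] >= min_impressions)
        if not (enough_volume and c["days"] >= min_days):
            decisions[name] = "wait"
        elif name in bottom_third and c["cpa"] > 1.25 * mid:
            decisions[name] = "kill"
        elif c["cpa"] < 0.80 * control_cpa:
            decisions[name] = "scale"
        else:
            decisions[name] = "hold"
    return decisions


# Invented CPAs for nine cells; every cell gets the same sample for simplicity.
raw = {"a": 18, "b": 21, "c": 22, "d": 24, "e": 25,
       "f": 27, "g": 30, "h": 34, "i": 40}
cells = {n: {"cpa": cpa, "conversions": 60, "impressions": 5000, "days": 7}
         for n, cpa in raw.items()}
cells["c"]["days"] = 4  # this cell has not run long enough yet

decisions = decide(cells, control_cpa=25)
print(decisions)
```

Cells that have not met the minimum sample return "wait" rather than a verdict, which keeps an early blip from triggering a kill or a scale.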

Operationalize this into a weekly cadence: run the 9-cell test, rank outcomes, move budget incrementally to winners (double then double again if performance holds), retire losers, and swap one new creative into the grid each week. Track decisions in a simple spreadsheet so your team can learn faster than the market moves.

Real world results: the budget math that pays for itself

Run the 3x3 matrix once and the budget math becomes almost boringly generous. Produce assets as modular templates instead of nine bespoke pieces and you can cut creative production to about $300 instead of roughly $1,800. Then allocate a small, fixed test spend, say $125 per variant for a fast validity check. Nine variants at $125 is $1,125 in media, so with production the full test tab comes to $1,425. That is your rapid experiment cost, paid in days not months.

Now the payoff. If your baseline CPA is $25 on 400 monthly conversions, you are spending $10,000 a month. A winning creative that drops CPA by 25 percent saves you $2,500 a month at the same conversion volume. Even a conservative lift pays back the $1,425 test inside a single campaign cycle, turning testing from expense into investment.
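The arithmetic is easy to check in a few lines. The figures below are the ones quoted in the text; the only added assumption is that conversion volume stays at 400 a month after the CPA drop.

```python
CELLS = 9

creative_cost = 300        # modular templates
spend_per_variant = 125    # fixed test spend per cell
experiment_cost = creative_cost + CELLS * spend_per_variant

baseline_cpa = 25
monthly_conversions = 400  # assumed to stay constant after the CPA drop
monthly_spend = baseline_cpa * monthly_conversions

cpa_drop = 0.25
monthly_savings = monthly_spend * cpa_drop
payback_months = experiment_cost / monthly_savings

print(f"Experiment cost: ${experiment_cost:,}")
print(f"Monthly spend:   ${monthly_spend:,}")
print(f"Monthly savings: ${monthly_savings:,.0f}")
print(f"Payback:         {payback_months:.2f} months")
```

Changing `cpa_drop` to a smaller value shows how conservative a lift can be before the test stops paying for itself within a month.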

The secret is early pruning. Run each cell for 48 to 72 hours, kill the bottom performers (usually the bottom six of nine), and reallocate spend to the top three. That simple cutoff often frees up 60 to 70 percent of your budget to amplify winners, shrinking waste and accelerating learning.
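A small sketch of the pruning step: rank cells by CPA, keep the top three, and compute the share of budget the cut frees up. It assumes spend is equal across cells, and the CPA values are invented.

```python
def prune(cpa_by_cell, keep=3):
    """Keep the `keep` cells with the lowest CPA; return the freed budget share."""
    ranked = sorted(cpa_by_cell, key=cpa_by_cell.get)  # lowest CPA first
    winners, losers = ranked[:keep], ranked[keep:]
    freed_share = len(losers) / len(ranked)  # valid only for equal spend per cell
    return winners, losers, freed_share


# Invented early CPAs after the 48-72 hour window.
early_cpa = {"c1": 31, "c2": 18, "c3": 27, "c4": 40, "c5": 21,
             "c6": 35, "c7": 19, "c8": 29, "c9": 33}
winners, losers, freed_share = prune(early_cpa)
print(winners, f"{freed_share:.0%} of budget freed")
```

Keeping three of nine equally funded cells frees two-thirds of the remaining spend, which sits inside the 60 to 70 percent range quoted above.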

Actionable checklist: cap per-variant spend, enforce a 48–72 hour early-kill rule, measure CTR and CPA simultaneously, then double down on the top creative. Follow that flow and the 3x3 test does not merely justify its cost; it pays for the next experiment.