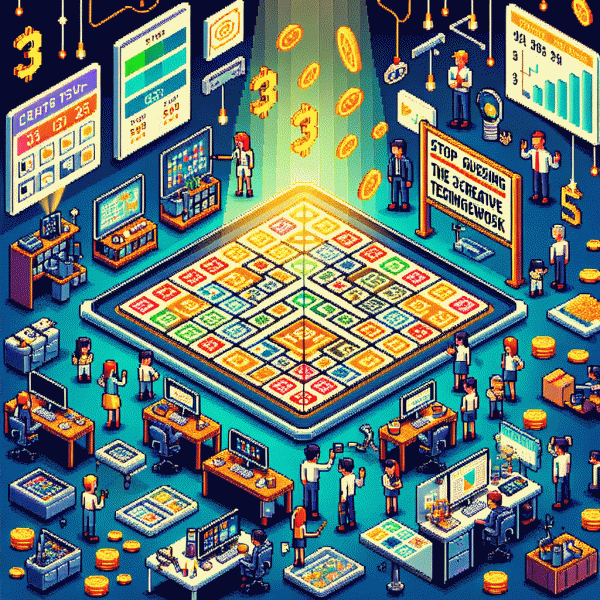

Stop Guessing: The 3x3 Creative Testing Framework That Slashes Ad Costs Fast

The 3x3, Explained: 3 Hooks x 3 Creatives = 9 Tests, Zero Fluff

Treat this like a marketing lab, not a guessing game. Choose three radically different reasons someone should stop scrolling, then craft three distinct executions for each reason. That 3x3 grid gives you nine clean cells that quickly reveal what actually moves your metrics, so you stop tossing budget at opinions and start buying real signal.

Begin by locking in your three hooks. Make them obvious and sharply different from one another: a pain-point callout that names the problem, a curiosity teaser that opens a knowledge gap, and a social-proof angle that shows people like the viewer getting results. Think of the hook as the first 1–3 seconds that earn attention; if it does not land immediately, nothing that follows will save the ad.

Next, produce three creative treatments that vary only the delivery, so no other variable muddies the result. For example: a tight demo cut, a short emotional scene, and a UGC/testimonial take. Keep brand elements, headline text, and the landing page identical so the experiment isolates hook versus execution. Use a consistent file-naming system so your 3x3 matrix reads at a glance when results roll in.
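One way to keep the grid legible is to generate the nine cell names from the hook and treatment lists instead of typing them by hand. The sketch below is a minimal illustration, assuming short hypothetical labels (`pain`, `curiosity`, `proof` for hooks; `demo`, `emotional`, `ugc` for treatments) and a hypothetical `h#-hook_c#-treatment` pattern; adapt both to your own convention.

```python
from itertools import product

# Hypothetical labels for the three hooks and three treatments described above.
HOOKS = ["pain", "curiosity", "proof"]
TREATMENTS = ["demo", "emotional", "ugc"]


def build_grid(hooks, treatments):
    """Return one name per hook x treatment cell, e.g. 'h1-pain_c2-emotional'."""
    names = []
    # product() pairs every hook with every treatment (3 x 3 = 9 cells).
    for (h_idx, hook), (c_idx, treatment) in product(
        enumerate(hooks, start=1), enumerate(treatments, start=1)
    ):
        names.append(f"h{h_idx}-{hook}_c{c_idx}-{treatment}")
    return names


grid = build_grid(HOOKS, TREATMENTS)
for name in grid:
    print(name)
```

Because the hook index always comes first, filtering results by `h1`, `h2`, or `h3` gives you a row of the matrix, and filtering by `c1`, `c2`, or `c3` gives you a column.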

Run all nine variants with equal spend and a short learning window (48–72 hours). Evaluate CTR, view rate, conversion rate, and CPA; drop the bottom third, double down on the top performers, and iterate by testing new hooks against the best creative. Rinse and repeat: small, controlled tests beat big guesses, and that is how you cut ad costs without the fluff.
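The metrics above reduce to simple ratios over raw counts. The sketch below computes them per variant and drops the worst third by CPA. It is a minimal illustration under stated assumptions: CTR is clicks ÷ impressions, view rate is video views ÷ impressions, conversion rate is conversions ÷ clicks, and a variant with zero conversions gets an infinite CPA so it ranks last. The `VariantStats` class and its field names are hypothetical, and ad platforms may define these metrics somewhat differently.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    name: str
    spend: float       # total spend in your account currency
    impressions: int
    clicks: int
    video_views: int   # assumed: whatever the platform counts as a "view"
    conversions: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def view_rate(self) -> float:
        return self.video_views / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.clicks if self.clicks else 0.0

    @property
    def cpa(self) -> float:
        # No conversions means infinite CPA, so the variant sorts last.
        return self.spend / self.conversions if self.conversions else float("inf")


def split_by_cpa(variants):
    """Sort by CPA (lower is better); return (keep, drop) with the worst third dropped."""
    ranked = sorted(variants, key=lambda v: v.cpa)
    cut = len(ranked) - len(ranked) // 3
    return ranked[:cut], ranked[cut:]


# Illustrative numbers only: nine variants at equal spend.
variants = [VariantStats(f"v{i}", 50.0, 5000, 50 + i, 1000, i) for i in range(9)]
keep, drop = split_by_cpa(variants)
print([v.name for v in drop])  # ['v2', 'v1', 'v0']
```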

Plug-and-Play Setup: Briefs, Naming, and Assets You Can Launch Today

Think of this as your ad-launch Swiss Army knife: a small, repeatable kit that takes you from a blank doc to a live campaign fast. Begin with a one-paragraph brief that clearly answers three questions (who you want to reach, what action you want them to take, and how you will measure success) so every creative you build has a target and a scoreboard.

Use a micro-brief template you can copy and paste. Audience: a short descriptor (age, interest, intent). Offer: a one-sentence value proposition and CTA. KPI: the primary metric and an acceptable CPA/CTR range. Keep it tiny; don't write a novel. Short briefs force faster decisions, cleaner naming, and repeatable tests.
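If you keep briefs in a shared tracker, the same three fields fit in a small record. This is a sketch only: the `MicroBrief` class, its field names, and the min/max representation of the acceptable KPI range are assumptions, not part of any ad tool.

```python
from dataclasses import dataclass


@dataclass
class MicroBrief:
    audience: str   # short descriptor: age, interest, intent
    offer: str      # one-sentence value proposition plus CTA
    kpi: str        # primary metric, e.g. "CPA" or "CTR"
    kpi_min: float  # lower bound of the acceptable range
    kpi_max: float  # upper bound of the acceptable range

    def in_range(self, value: float) -> bool:
        """True if an observed KPI value falls inside the acceptable range."""
        return self.kpi_min <= value <= self.kpi_max

    def render(self) -> str:
        """One-line version to paste at the top of a creative brief."""
        return (
            f"Audience: {self.audience}; Offer: {self.offer}; "
            f"KPI: {self.kpi} {self.kpi_min:g}-{self.kpi_max:g}"
        )


# Hypothetical example brief.
brief = MicroBrief(
    audience="parents 25-34, meal-prep intent",
    offer="Save 5 hours a week: start a free trial",
    kpi="CPA",
    kpi_min=0,
    kpi_max=25,
)
print(brief.render())
```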

Adopt a strict naming convention so your analytics don't descend into chaos. A compact pattern like platform_audience_creative_v# (e.g., facebook_moms25-34_demo_v02) makes filtering trivial. Always increment the version number for edits, and add the objective or bid type (cpc/cpa) if you run multiple strategies, so you can slice performance without guessing what changed.
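A convention only helps if every name follows it, so it can be worth building and checking names in code. The sketch below assumes the `platform_audience_creative_v#` pattern from the text with a zero-padded two-digit version and an optional `_cpc`/`_cpa` suffix for the bid strategy. The regex, the suffix position, and the helper names are all assumptions.

```python
import re

# Assumed shape: platform_audience_creative_vNN with an optional _cpc/_cpa suffix.
NAME_RE = re.compile(
    r"^(?P<platform>[a-z]+)_(?P<audience>[a-z0-9-]+)_(?P<creative>[a-z0-9-]+)"
    r"_v(?P<version>\d{2,})(?:_(?P<bid>cpc|cpa))?$"
)


def build_name(platform, audience, creative, version, bid=None):
    """Assemble a name; the version is zero-padded to two digits."""
    name = f"{platform}_{audience}_{creative}_v{version:02d}"
    return f"{name}_{bid}" if bid else name


def parse_name(name):
    """Split a name into its parts, or raise if it breaks the convention."""
    match = NAME_RE.match(name)
    if match is None:
        raise ValueError(f"name does not follow the convention: {name!r}")
    parts = match.groupdict()
    parts["version"] = int(parts["version"])
    return parts


def bump_version(name):
    """Return the same name with the version incremented by one."""
    p = parse_name(name)
    return build_name(p["platform"], p["audience"], p["creative"], p["version"] + 1, p["bid"])


name = build_name("facebook", "moms25-34", "demo", 2)
print(name)                # facebook_moms25-34_demo_v02
print(bump_version(name))  # facebook_moms25-34_demo_v03
```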

Ship a minimal asset pack: 3 visuals (static, video, thumbnail), 3 headlines, 1 description, and 3 audience segments, which together form your 3x3 starter. Upload labeled files, duplicate the ad set, swap in the creatives, launch on a modest budget, and let the data breathe for 48–72 hours. That's all it takes to stop guessing and start scaling.

How to Run It on Instagram: Budgets, Pacing, and Fast Decision Rules

Treat your 3x3 grid as nine small experiments. Start each creative×audience cell with a tight daily budget, roughly $8–15 per cell for accounts with moderate traffic or $3–6 for micro-budgets, and run them over a 48–72 hour learning window. That gives the delivery algorithm enough signal to settle, and gives you enough data to judge CTR and CPC without wasting cash. Keep bidding on automatic so the algorithm can stabilize; manual bidding invites guesswork.
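Because every cell gets the same budget, the cost of a round is simple arithmetic: per-cell daily budget × 9 cells × days in the learning window. The helper below is a minimal sketch of that calculation (the function name is hypothetical); it returns daily and total spend at the low and high ends of a budget range.

```python
def grid_budget(per_cell_low, per_cell_high, cells=9, hours_low=48, hours_high=72):
    """Return (daily_low, daily_high, total_low, total_high) for an equally funded grid.

    Totals pair the low per-cell budget with the short window and the high
    per-cell budget with the long window, giving the full spend range.
    """
    daily_low = per_cell_low * cells
    daily_high = per_cell_high * cells
    total_low = daily_low * hours_low / 24
    total_high = daily_high * hours_high / 24
    return daily_low, daily_high, total_low, total_high


print(grid_budget(8, 15))  # (72, 135, 144.0, 405.0): moderate-traffic account
print(grid_budget(3, 6))   # (27, 54, 54.0, 162.0): micro-budget account
```

At the moderate-traffic range, one full 3x3 round therefore costs roughly $144–405 before any winner is scaled.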

Pacing matters: front-load impressions, but don't drive frequency up too fast. Use standard pacing unless a launch needs rapid signal; in that case, accelerate for 24–36 hours, then switch back. After the initial window, check reach, CPA, and CTR; mark any cell whose CPA is 30% or more above your target as "kill" and cut its budget immediately. Log every decision so you can spot repeatable patterns across tests.
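The kill rule and the decision log can live in one small function. This is a sketch assuming "30% or more above target" means a CPA of at least 1.3 × target; the function name, the dictionary log format, and the `keep` label for cells that pass are hypothetical.

```python
def pacing_decision(cell, cpa, target_cpa, log, threshold=0.30):
    """Return 'kill' or 'keep' for one cell and append the decision to log."""
    limit = target_cpa * (1 + threshold)
    decision = "kill" if cpa >= limit else "keep"
    # Record inputs alongside the outcome so patterns can be reviewed across tests.
    log.append(
        {"cell": cell, "cpa": cpa, "target_cpa": target_cpa, "decision": decision}
    )
    return decision


decisions = []
print(pacing_decision("h1-pain_c1-demo", cpa=30.0, target_cpa=20.0, log=decisions))      # kill
print(pacing_decision("h2-curiosity_c3-ugc", cpa=22.0, target_cpa=20.0, log=decisions))  # keep
```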

Fast decision rules are your friend. Require at least 500–1,000 impressions or 10–20 results before calling a winner, then promote winners by doubling their budget and expanding lookalike size in stages. Kill the bottom third, hold the middle third steady for a retest, and scale the top third. Track creative-level CTR alongside landing-page conversion rate: a strong CTR with weak conversion signals a funnel issue, not a creative failure.
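Taken together, these rules form a small decision procedure: ignore cells without enough data, rank the rest by CPA, scale the top third at double budget, hold the middle, kill the bottom, and flag cells whose CTR beats the median while their conversion rate trails it. The sketch below assumes the upper ends of the text's ranges (1,000 impressions or 20 results) as defaults, treats "results" as conversions, and uses hypothetical dictionary fields and sample numbers; it is an illustration, not a platform feature.

```python
from statistics import median


def cpa(cell):
    """Cost per result; cells with no results rank last."""
    return cell["spend"] / cell["results"] if cell["results"] else float("inf")


def is_ready(cell, min_impressions=1000, min_results=20):
    # Either threshold is enough, per the "impressions or results" rule.
    return cell["impressions"] >= min_impressions or cell["results"] >= min_results


def plan_next_round(cells):
    """Return {name: (action, new_daily_budget, funnel_issue)} for judged cells."""
    judged = sorted((c for c in cells if is_ready(c)), key=cpa)
    if not judged:
        return {}
    ctrs = [c["clicks"] / c["impressions"] if c["impressions"] else 0.0 for c in judged]
    cvrs = [c["results"] / c["clicks"] if c["clicks"] else 0.0 for c in judged]
    med_ctr, med_cvr = median(ctrs), median(cvrs)
    n, third = len(judged), len(judged) // 3
    plan = {}
    for i, (cell, ctr, cvr) in enumerate(zip(judged, ctrs, cvrs)):
        if i < third:
            action, budget = "scale", cell["daily_budget"] * 2  # double winners
        elif i >= n - third:
            action, budget = "kill", 0.0
        else:
            action, budget = "hold", cell["daily_budget"]  # keep steady for retest
        # Strong clicks but weak conversion points at the landing page.
        funnel_issue = ctr > med_ctr and cvr < med_cvr
        plan[cell["name"]] = (action, budget, funnel_issue)
    return plan


# Illustrative numbers only; cell "d" has too little data to judge.
cells = [
    {"name": "a", "impressions": 5000, "clicks": 100, "results": 20, "spend": 200.0, "daily_budget": 10.0},
    {"name": "b", "impressions": 5000, "clicks": 150, "results": 10, "spend": 200.0, "daily_budget": 10.0},
    {"name": "c", "impressions": 5000, "clicks": 50, "results": 5, "spend": 200.0, "daily_budget": 10.0},
    {"name": "d", "impressions": 300, "clicks": 5, "results": 1, "spend": 20.0, "daily_budget": 10.0},
]
plan = plan_next_round(cells)
print(plan)
```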

Want a plug-and-play boost that skips the setup headaches? Structured tools and templates let you spin up 3x3 tests quickly, which costs less than wasted guesswork and gets you to insights faster. For a quick start, visit get instagram promotion to see ready-made packs and pacing presets that match these rules and can shave days off your learning curve.

Read the Results: Kill Losers in 48 Hours, Push Winners with Confidence

After you launch a 3x3 creative grid, treat the first 48 hours like triage. Monitor CTR, CPC, and conversions at the creative level rather than in the campaign aggregate. Watch for clear warning signs: falling engagement, a spiking cost per acquisition, or impressions climbing while outcomes stay flat. If a creative shows the same bad trend across two audience slices, it is not learning; it is leaking budget.

Apply simple kill rules to avoid analysis paralysis. For example, once a creative has run for 48 hours and logged at least 20 events, pause it if its CTR is below 50 percent of the test median or its CPA is above 2x your target. If volume is too low to reach that threshold, extend the test to a fourth day or reallocate that budget to higher-traffic variants. The goal is decisive moves, not wishful tinkering.
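Written as code, the 48-hour triage might look like the sketch below. It rests on assumptions worth stating: "events" is read as conversions, the CTR median is taken across all creatives in the test, and creatives below the event threshold are marked for a fourth day or budget reallocation instead of being judged. Field names, labels, and sample numbers are hypothetical.

```python
from statistics import median


def triage(creatives, target_cpa, hours_live, min_events=20, min_hours=48):
    """Label each creative 'wait', 'extend', 'pause', or 'keep'."""
    ctrs = {
        c["name"]: c["clicks"] / c["impressions"] if c["impressions"] else 0.0
        for c in creatives
    }
    median_ctr = median(ctrs.values())
    labels = {}
    for c in creatives:
        name = c["name"]
        if hours_live < min_hours:
            labels[name] = "wait"  # too early to judge anything
        elif c["conversions"] < min_events:
            labels[name] = "extend"  # low volume: add a day or move budget
        else:
            cpa = c["spend"] / c["conversions"]
            weak_ctr = ctrs[name] < 0.5 * median_ctr
            too_costly = cpa > 2 * target_cpa
            labels[name] = "pause" if weak_ctr or too_costly else "keep"
    return labels


# Illustrative numbers only.
creatives = [
    {"name": "x", "impressions": 10000, "clicks": 200, "conversions": 25, "spend": 250.0},
    {"name": "y", "impressions": 10000, "clicks": 80, "conversions": 30, "spend": 300.0},
    {"name": "z", "impressions": 10000, "clicks": 150, "conversions": 22, "spend": 1000.0},
    {"name": "w", "impressions": 10000, "clicks": 300, "conversions": 5, "spend": 100.0},
]
print(triage(creatives, target_cpa=20.0, hours_live=48))
```

Here `y` would be paused for a weak CTR and `z` for an expensive CPA, while `w` has too few conversions to judge yet.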

When a creative wins, scale with confidence but without breaking the machine. Increase spend in controlled steps of 20 to 30 percent every 48 hours, and duplicate the winning ad into fresh ad sets to protect relevance scores. Broaden audiences gradually, change one variable at a time, and keep a small pool of new variations ready so creative fatigue never catches you off guard.
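Small steps compound: at +25% every 48 hours, a budget roughly doubles after three steps (about six days). The sketch below generates that schedule; the function name and the 25% default are assumptions chosen from inside the 20 to 30 percent band in the text.

```python
def scale_schedule(start_budget, step=0.25, interval_hours=48, steps=4):
    """Return [(hours_from_now, daily_budget), ...] for controlled increases."""
    if not 0.20 <= step <= 0.30:
        raise ValueError("step should stay within the 20-30 percent band")
    schedule = []
    budget = start_budget
    for i in range(steps + 1):
        schedule.append((i * interval_hours, round(budget, 2)))
        budget *= 1 + step  # compound on the previous budget, not the start
    return schedule


for hours, budget in scale_schedule(100.0):
    print(f"+{hours:>3}h: ${budget:.2f}")
```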

End each sprint with a short log: which creatives were paused, which were promoted, and which hypothesis to test next. Automate the basic rules so you and your team can focus on strategy. Fast kills and steady scaling turn testing into a predictable engine that cuts costs and frees you to be creatively bold.

Scale Without Waste: Iterate Winners into New Combos and Cut CPA

Turn a winning creative into a factory, not a one-hit wonder. When a variant proves it can convert, don't blast budget across every audience and pray. Break the asset into swappable parts (headline, visual, offer), then recombine those parts into lean batches so you find more winners without amplifying losers.

Start by harvesting the highest-ROI pieces from recent winners: the top-performing copy lines, the image crop that beat the rest, and the CTA that nudged people to act. Run tight, mirrored experiments that change only one variable at a time so the signal stands out from the noise. Keep batch sizes small and budgets focused so each combination gets meaningful data quickly.
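Mirrored, single-variable tests are easy to generate mechanically: start from the control and change exactly one field per variant. The sketch below assumes a winner is described by a plain dictionary of parts (headline, visual, CTA); the function name and the example values are hypothetical.

```python
def single_variable_variants(control, options):
    """Return (changed_field, variant) pairs that differ from control in one field."""
    variants = []
    for field, values in options.items():
        for value in values:
            if value == control[field]:
                continue  # identical to the control, so not a test
            variant = dict(control)  # copy so the control stays untouched
            variant[field] = value
            variants.append((field, variant))
    return variants


control = {"headline": "Cut prep time in half", "visual": "crop-square", "cta": "Shop now"}
options = {
    "headline": ["Cut prep time in half", "Dinner in 15 minutes"],
    "cta": ["Shop now", "Learn more"],
}
for field, variant in single_variable_variants(control, options):
    print(field, variant)
```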

Build a simple production cadence and classify experiments into clear lanes:

- 🚀 Fast: a quick A/B test of two CTAs on the same creative to seize momentum.

- ⚙️ Scale: recombine 3 proven headlines with 4 proven visuals (12 combinations) to find multiplicative winners.

- 💥 Optimize: swap micro-elements like button color or caption length after a winner emerges.
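The Scale lane's recombination is a Cartesian product: 3 headlines × 4 visuals gives 12 combinations. A minimal sketch, assuming hypothetical placeholder labels and skipping any pairs you have already run so each batch stays lean:

```python
from itertools import product


def recombine(headlines, visuals, already_run=()):
    """Return every headline x visual pair that has not been tested yet."""
    tested = set(already_run)
    return [(h, v) for h, v in product(headlines, visuals) if (h, v) not in tested]


headlines = ["H1", "H2", "H3"]      # hypothetical proven headlines
visuals = ["V1", "V2", "V3", "V4"]  # hypothetical proven visuals
batch = recombine(headlines, visuals, already_run=[("H1", "V1")])
print(len(batch))  # 11: twelve combinations minus the one already run
```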

If you want help speeding this up, check out buy facebook boosting service to accelerate reach for your strongest combinations while you double down. Treat the process like product iteration: document every change, freeze winners as control groups, and prune anything that drives CPA up. Do this consistently and your spend becomes a targeted engine that scales winners instead of a spray-and-hope budget drain.