Steal This 3x3 Creative Testing Framework: Find Winners Fast and Save Money

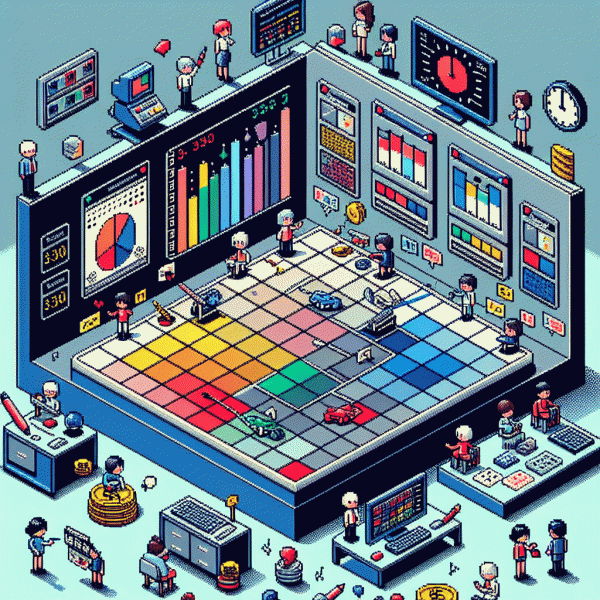

The 3x3 explained: the tiny grid that crushes guesswork

Think of this as a tiny creative lab: three levers, three variants each, nine fast experiments. The magic is that you get real comparative data without a giant media budget. Combinations reveal patterns — a headline that sings with one image, a CTA that tanks across the board — so you stop guessing and start iterating. This grid turns creative chaos into a reproducible playbook.

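Start by choosing three core elements to vary: for example, the visual (image or short video), the primary headline, and the call to action. Produce exactly three distinct versions of each: a safe option, a bold option, and one experimental idea. Testing every possible combination would take 27 ads (3 × 3 × 3), so lay two elements out as the grid's rows and columns and rotate the third so each of its versions appears once in every row and every column. That keeps the test at nine ads while every variant still shows up three times. Keep everything else constant: same landing page, same audience slice, same placement. That isolation is the secret sauce for clean, comparable results.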
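One standard way to fit three 3-variant elements into nine ads is a 3x3 Latin square. The sketch below is an illustration, not the author's tooling: visuals are rows, headlines are columns, and the CTA is shifted cyclically with `(row + col) % 3` so no CTA repeats within a row or column. The variant labels are placeholders.

```python
from itertools import product


def latin_square_grid(visuals, headlines, ctas):
    """Build nine ad combinations from three 3-variant elements.

    Visuals are rows, headlines are columns, and the CTA is rotated
    cyclically so each CTA appears exactly once in every row and every
    column (a 3x3 Latin square). A full factorial would need 27 ads.
    """
    n = len(visuals)
    if not (len(headlines) == len(ctas) == n):
        raise ValueError("each element needs the same number of variants")
    grid = []
    for r, c in product(range(n), range(n)):
        grid.append({
            "visual": visuals[r],
            "headline": headlines[c],
            "cta": ctas[(r + c) % n],  # cyclic shift keeps rows/columns unique
        })
    return grid


# Placeholder labels for the safe / bold / experimental variants.
combos = latin_square_grid(
    ["close-up", "context", "behind-the-scenes"],
    ["safe headline", "bold headline", "experimental headline"],
    ["Shop now", "Learn more", "Sign up"],
)
for combo in combos:
    print(combo)
```

The cyclic shift is the simplest valid construction; any arrangement that keeps each CTA unique per row and column works equally well.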

Launch all nine combinations at once and split your test budget evenly. Run a tight window — aim for 3 to 7 days or until your predefined thresholds are met — and focus on the metrics that matter: CTR, conversion rate, and CPA. Do not be seduced by vanity metrics. The design multiplies learning: one strong visual paired with multiple headlines will tell you where to invest attention next.
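The even budget split and the three core metrics are simple arithmetic. Here is a minimal sketch of that arithmetic. It assumes conversion rate is measured per click, though some teams measure it per session or per impression. The example figures are hypothetical.

```python
def split_budget(total, n_cells=9):
    """Split the test budget evenly across the grid cells."""
    return round(total / n_cells, 2)


def cell_metrics(impressions, clicks, conversions, spend):
    """Return CTR, conversion rate, and CPA for one grid cell.

    Conversion rate is computed per click (an assumption). CPA is None
    when there are no conversions yet, so an empty cell is never
    mistaken for a free one.
    """
    ctr = clicks / impressions if impressions else 0.0
    cvr = conversions / clicks if clicks else 0.0
    cpa = spend / conversions if conversions else None
    return {"ctr": ctr, "cvr": cvr, "cpa": cpa}


# Hypothetical numbers: a $450 test budget and one cell's first results.
per_cell = split_budget(450)
print(per_cell)
print(cell_metrics(impressions=10_000, clicks=120, conversions=6, spend=per_cell))
```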

When a winner appears, scale it and iterate: swap out one losing variant for a fresh idea and spin another 3x3. Put simple stop rules in place to kill underperformers and free budget for winners. This method is fast, cheap, and delightfully immune to opinion-based decisions. Run one 3x3 this week and watch wasted spend shrink while clarity and conversion rise.
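To decide which variant to swap out, look at each variant's average across every cell it appeared in, not at a single cell. A sketch of that averaging follows, assuming each result row is a dict with the element names and a numeric metric. Cells with no value yet are skipped. The nine rows are made-up CPAs for illustration only.

```python
from statistics import mean


def variant_averages(results, element, metric="cpa"):
    """Average a metric over every cell in which each variant of `element` appears."""
    by_variant = {}
    for row in results:
        if row[metric] is not None:  # skip cells without data yet
            by_variant.setdefault(row[element], []).append(row[metric])
    return {variant: mean(values) for variant, values in by_variant.items()}


def weakest_variant(results, element, metric="cpa", lower_is_better=True):
    """Return the variant of `element` to replace in the next 3x3."""
    averages = variant_averages(results, element, metric)
    pick = max if lower_is_better else min
    return pick(averages, key=averages.get)


# Hypothetical CPAs for a visual x headline grid.
results = [
    {"visual": "A", "headline": "X", "cpa": 10},
    {"visual": "A", "headline": "Y", "cpa": 12},
    {"visual": "A", "headline": "Z", "cpa": 14},
    {"visual": "B", "headline": "X", "cpa": 20},
    {"visual": "B", "headline": "Y", "cpa": 22},
    {"visual": "B", "headline": "Z", "cpa": 24},
    {"visual": "C", "headline": "X", "cpa": 8},
    {"visual": "C", "headline": "Y", "cpa": 9},
    {"visual": "C", "headline": "Z", "cpa": 10},
]
print(weakest_variant(results, "visual"), weakest_variant(results, "headline"))
```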

Build your grid: 3 hooks, 3 visuals, 3 CTAs in 30 minutes

Set a 30-minute timer and treat this like a creative sprint. Open a blank doc or sticky-note board and sketch a 3x3 grid: three hooks down the left, three visual treatments across the top, and three CTAs to rotate through. Keep every idea to one short line so you can mix and match without overthinking; speed beats polish in early tests.

Start with three distinct hooks that force a different emotional response. Problem: call out a pain point in one sentence. Curiosity: tease a surprising insight or stat. Social proof: lead with proof or a quick customer line. Write each hook as a headline so it can be dropped directly into captions or the opening frame of a video.

For visuals, pick three fast-to-shoot treatments. Close-up: product or feature detail with tight framing and crisp light. Context: person using the product in a realistic setting to show benefit. Behind-the-scenes: process, setup, or team moment that humanizes the brand. Use your phone, natural window light, and one simple prop per shot to keep production under ten minutes per visual.

Close the grid with three CTAs that map to intent and friction. Buy/Shop: for ready-to-purchase viewers. Learn/Watch: for curious skimmers who need more info. Sign up/Claim: for lead capture or limited offers. Now create nine combos, launch each with a small spend or organic push for 24 to 72 hours, and pick the top performer by cost per meaningful action (spend divided by the purchases, sign-ups, or other results you actually care about). Repeat the sprint and double down on winners.

Launch smarter: budgets, cadence, and clean data from day one

Think of the launch as a science experiment with a party budget: smart, staged, and impossible to waste. Split your initial spend across three creative packs and three micro-audiences so each creative has room to breathe. Start small with a daily base of $15–30 per pack. Whether that clears the platform's learning threshold depends on your cost per result (Meta, for example, describes the learning phase as needing roughly 50 optimization events in a week), but it keeps you from burning cash on losers.
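A quick budget check makes the per-pack figure concrete. This sketch assumes each pack's daily budget is split evenly across the three audiences and the window is 3 days. The `expected_cpa` input is your own estimate, not a platform value. The last field shows how many conversions one pack could buy in a week, which you can compare against the platform's learning threshold.

```python
def budget_plan(per_pack_daily, expected_cpa, packs=3, audiences=3, days=3):
    """Daily, per-cell, and window spend for a packs x audiences launch.

    Assumes each pack's daily budget is split evenly across its audiences.
    `expected_cpa` is a user-supplied estimate used only to gauge how many
    optimization events a single pack could generate in 7 days.
    """
    daily_total = per_pack_daily * packs
    return {
        "daily_total": daily_total,
        "per_cell_daily": round(per_pack_daily / audiences, 2),
        "window_total": daily_total * days,
        "weekly_conversions_per_pack": per_pack_daily * 7 / expected_cpa,
    }


# Top of the suggested range with a hypothetical $20 CPA.
print(budget_plan(30, expected_cpa=20))
```

At a $20 CPA, $30 a day buys about 10 conversions per pack per week. So treat the first window as a creative screen rather than a fully optimized delivery test.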

Make cadence your secret weapon: run a minimum test window of 72 hours to collect usable signal, then give top performers a second stage of seven days to confirm consistency. After that first window, kill any creative that underperforms the median by more than 30% on your primary metric, and pause duplicates or near-identical assets so they do not compete with each other in the auction.
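"30% worse than the median" flips direction depending on the metric. For costs like CPA, worse means higher. For rates like CTR, worse means lower. The sketch below handles both cases, assuming results come as a `{creative_name: value}` dict. The numbers are hypothetical.

```python
from statistics import median


def kill_list(results, lower_is_better, tolerance=0.30):
    """Return creatives more than `tolerance` worse than the group median.

    lower_is_better=True suits cost metrics (CPA, CPC);
    lower_is_better=False suits rate metrics (CTR, conversion rate).
    """
    mid = median(results.values())
    if lower_is_better:
        limit = mid * (1 + tolerance)
        return sorted(name for name, value in results.items() if value > limit)
    limit = mid * (1 - tolerance)
    return sorted(name for name, value in results.items() if value < limit)


# Hypothetical 72-hour results.
cpa = {"A": 10, "B": 12, "C": 20, "D": 11, "E": 9}
ctr = {"A": 0.012, "B": 0.006, "C": 0.010}
print(kill_list(cpa, lower_is_better=True))   # cost metric
print(kill_list(ctr, lower_is_better=False))  # rate metric
```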

Scale like a scalpel, not a sledgehammer. When a creative passes stage two, bump its budget by 2–3x in one step and monitor CPA for 48 hours before another increase. Keep audience sizes tight for tests (lookalikes 1–3%, interest clusters under 500k) so signals aren't diluted — then broaden only after repeatable wins.

Data hygiene wins more campaigns than fancy creative. Use strict naming conventions, tag every ad with UTMs, exclude converters from test audiences, and use the same conversion (attribution) window across campaigns so their results are comparable. If your reporting looks messy at launch, it's going to cost you twice: wasted spend now and bad decisions later.
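Naming and UTM tagging are easy to automate so nobody types them by hand. The naming pattern below (campaign, audience, creative, CTA, and date joined by underscores) is a hypothetical convention, not a standard. The UTM keys (`utm_source`, `utm_medium`, `utm_campaign`, `utm_content`) are the standard campaign parameters. The code uses only Python's `urllib.parse`.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def ad_name(campaign, audience, creative, cta, launch_date):
    """Hypothetical naming convention: lowercase fields joined by underscores."""
    parts = [campaign, audience, creative, cta, launch_date]
    return "_".join(p.strip().lower().replace(" ", "-") for p in parts)


def tag_url(url, source, medium, campaign, content):
    """Append UTM parameters while keeping any existing query string."""
    parsed = urlparse(url)
    query = dict(parse_qsl(parsed.query))
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": content,  # ad name identifies the exact grid cell
    })
    return urlunparse(parsed._replace(query=urlencode(query)))


name = ad_name("Spring Test", "LAL 1pct", "Close Up", "Shop", "2024-05-01")
print(name)
print(tag_url("https://example.com/landing?ref=ig", "instagram",
              "paid_social", "spring-test", name))
```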

Read the signals: fast metrics and simple kill rules

Think of the first 24 to 48 hours as a smoke test. In a 3x3 setup where three creatives run across three audiences, you do not wait for long conversion windows to pronounce a winner. Watch the fast metrics that actually move early budget decisions: CTR and link CTR for attention, view rate and average watch time for video hooks, CPC and CPM for efficiency, plus social signals like saves and shares for resonance. These are the signals that tell you whether a creative has the muscle to scale.

Turn those signals into blunt, binary kill rules so decision making does not become a democracy of opinions. For example, after 1,000 impressions or 48 hours, kill any creative with CTR below 0.4 percent or view rate under 15 percent, or any placement where CPC is more than 2x your target. For audiences, drop any audience whose cost per link click is more than 3x the cohort median. Also use relative rules: automatically cut the bottom third of creative-audience pairs and promote the top 20 percent for a follow-up ramp.
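The example thresholds translate directly into code. A sketch, under a few assumptions. Rates are fractions, so 0.004 means 0.4 percent. The rules fire once either checkpoint (1,000 impressions or 48 hours) is reached. `view_rate` is None for static ads. "3x worse" is read as more than three times the cohort median. The stats dict layout is hypothetical.

```python
from statistics import median


def creative_verdict(stats, target_cpc):
    """Apply the example kill rules to one creative/placement.

    Returns "wait" before either checkpoint, then "kill" or "keep".
    """
    if stats["impressions"] < 1000 and stats["hours_live"] < 48:
        return "wait"  # neither checkpoint reached yet
    if stats["ctr"] < 0.004:
        return "kill"
    if stats["view_rate"] is not None and stats["view_rate"] < 0.15:
        return "kill"
    if stats["cpc"] > 2 * target_cpc:
        return "kill"
    return "keep"


def audience_drops(cost_per_link_click, factor=3.0):
    """Audiences whose cost per link click exceeds `factor` x the cohort median."""
    mid = median(cost_per_link_click.values())
    return sorted(a for a, c in cost_per_link_click.items() if c > factor * mid)


def rank_pairs(scores):
    """Split creative-audience pairs into promote (top 20%) and cut (bottom third).

    Higher score is better, e.g. link CTR.
    """
    ordered = sorted(scores, key=scores.get, reverse=True)
    n = len(ordered)
    promote = ordered[: max(1, round(n * 0.2))]
    cut = ordered[n - n // 3:]
    return promote, cut
```

For a nine-pair grid this promotes two pairs and cuts three, which matches the "bottom third, top 20 percent" rule of thumb.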

When reading signals, focus on direction and consistency, not tiny fluctuations. A creative that trends up modestly is more promising than one that spikes and then collapses. Monitor frequency and engagement quality, because high click volume with zero post-click time is false-positive fuel. Always cross-reference creative performance by audience to catch mismatches; a format that wins with one age segment might flounder with another, and that insight is pure gold for iteration.
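"Direction and consistency" can be checked with two numbers. The first is the least-squares slope of a daily metric. The second is how much of its peak the creative still holds on the latest day. A minimal sketch, assuming one value per day (for example daily CTR in percent). The two series are hypothetical.

```python
def trend_slope(daily_values):
    """Least-squares slope of a daily metric, in metric units per day."""
    n = len(daily_values)
    if n < 2:
        raise ValueError("need at least two days of data")
    mean_x = (n - 1) / 2
    mean_y = sum(daily_values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(daily_values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def last_day_vs_peak(daily_values):
    """Share of the peak value retained on the most recent day."""
    peak = max(daily_values)
    return daily_values[-1] / peak if peak else 0.0


steady = [1.0, 1.1, 1.2, 1.3]  # modest, consistent climb
spiky = [1.0, 3.0, 1.2, 0.8]   # spikes, then collapses
for series in (steady, spiky):
    print(round(trend_slope(series), 3), round(last_day_vs_peak(series), 2))
```

The steady series has a positive slope and sits at its peak. The spiky one has a negative slope and keeps only about a quarter of its peak, so it is the weaker bet despite its higher best day.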

Operationalize these rules so you can act fast and avoid analysis paralysis. Codify three or four stop-and-scale rules, wire them into automated rules or a simple sheet and script, and commit to a 48-hour review rhythm. Be ruthless about killing losers and generous about funding marginal winners for a second test. The result is faster learning, fewer wasted dollars, and more budget freed to back the creative that actually earns attention.

Scale the winners: iterate, clone, and watch CPA drop

When a creative starts converting, treat it like a pet experiment: nurture first, then scale. Duplicate the winning ad or ad set and increase budget in 20–30% stair steps every 48 hours while watching CPA. If CPA drops or stays flat, keep scaling; if it creeps up, revert to the last stable step. Small, measured bumps beat reckless blasting.
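The stair-step rule is a small decision function applied at each 48-hour checkpoint. A sketch, assuming `history` is a list of `(daily_budget, cpa)` pairs, oldest first. `step=0.25` sits inside the 20–30% range. The `tolerance` parameter is an added assumption for treating small CPA wobbles as "flat"; it defaults to 0, meaning strictly flat or better. Reverting to the previous checkpoint's budget is a simplification of "last stable step".

```python
def next_budget(history, step=0.25, tolerance=0.0):
    """Choose the next daily budget from (budget, cpa) checkpoints.

    If CPA held flat or improved versus the previous checkpoint (within
    `tolerance`), raise the budget by `step`. Otherwise revert to the
    previous checkpoint's budget.
    """
    budget, cpa = history[-1]
    if len(history) < 2:
        return round(budget * (1 + step), 2)  # first step up
    prev_budget, prev_cpa = history[-2]
    if cpa <= prev_cpa * (1 + tolerance):
        return round(budget * (1 + step), 2)
    return prev_budget


print(next_budget([(100, 20.0)]))               # first bump
print(next_budget([(100, 20.0), (125, 19.5)]))  # CPA improved: keep climbing
print(next_budget([(100, 20.0), (125, 23.0)]))  # CPA crept up: revert
```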

Clone the hero creative across audiences and placements, changing only one variable per clone (headline, CTA, thumbnail, landing page, or targeting) so you know what moved the needle. Try lookalikes at 1% and 2%, change your budget or bidding setup if needed (for example, from CBO to ad set budgets, or from automatic to manual bids), and turn a 15-second hero into a 6-second punchline for Reels or Stories to battle attention decay.
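Generating one-variable clones programmatically guarantees that no clone accidentally changes two things at once. The sketch below models an ad as a frozen dataclass and uses `dataclasses.replace` to swap a single field per clone. The `AdConfig` fields and example values are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AdConfig:
    """Hypothetical ad definition; only the fields named in the text."""
    headline: str
    cta: str
    thumbnail: str
    landing_page: str
    audience: str


def one_variable_clones(hero, alternatives):
    """Yield (changed_field, clone) pairs that differ from `hero` in one field."""
    for field_name, values in alternatives.items():
        for value in values:
            if value != getattr(hero, field_name):  # skip no-op clones
                yield field_name, replace(hero, **{field_name: value})


hero = AdConfig("Stop wasting ad spend", "Shop now", "closeup.jpg", "/offer", "broad")
alternatives = {"audience": ["lal-1pct", "lal-2pct"], "cta": ["Learn more", "Shop now"]}
clones = list(one_variable_clones(hero, alternatives))
for field_name, clone in clones:
    print(field_name, clone)
```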

As you expand, prime momentum with social proof and early engagement boosts. A quick tactic is to accelerate initial interactions with a service that kickstarts visibility, then let organic conversions carry the rest; for example, consider buying instant, real Instagram likes as a short-term velocity play while you monitor efficiency and conversion rate.

Operationalize the wins: set automated rules to pause clones when CPA is 20% worse than baseline, funnel budget to top audiences, refresh creatives every 7–14 days, and log hypotheses with outcomes. Scale smart, iterate fast, and you will see CPA trend in the friendlier direction.
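Two of these automated rules fit in a few lines. A sketch, assuming CPA comparisons use the same attribution window and that "refresh every 7–14 days" is enforced at the 14-day upper bound by default. Both thresholds are parameters, so you can tighten them.

```python
from datetime import date, timedelta


def should_pause(clone_cpa, baseline_cpa, threshold=0.20):
    """Pause a clone whose CPA is more than `threshold` worse than baseline."""
    return clone_cpa > baseline_cpa * (1 + threshold)


def refresh_due(launched_on, today, max_age_days=14):
    """True once a creative has run `max_age_days` or longer."""
    return today - launched_on >= timedelta(days=max_age_days)


print(should_pause(12.5, 10.0))                          # 25% worse: pause
print(refresh_due(date(2024, 5, 1), date(2024, 5, 15)))  # 14 days old: refresh
```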