Marketers Hate This Hack: Let the Robots Run Your Ads and Get Your Time Back

Bye-Bye Busywork: What AI Actually Handles vs What Still Needs You

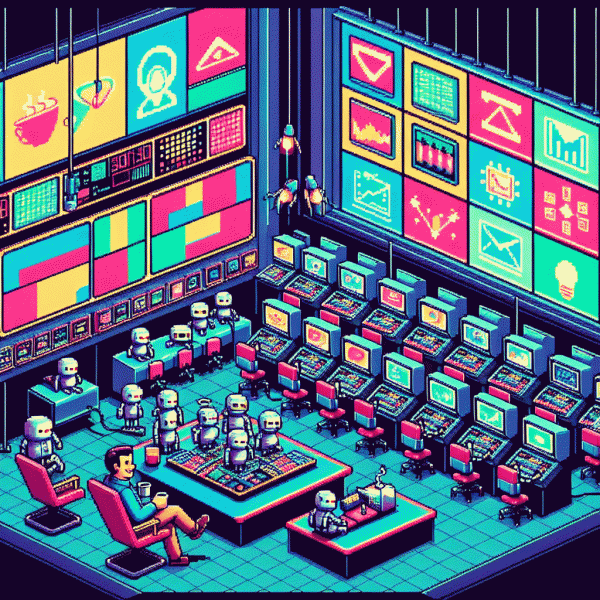

Think of AI as your tireless junior ad-ops specialist: it eats rules and spits out scale. In practice that means automated bidding, budget pacing, dynamic creative swaps, multivariate testing, dayparting, lookalike expansion, negative-audience pruning and instant performance reports. It's the engine that runs hundreds of micro-experiments, so you can stop babysitting manual tweaks and do actual strategy work.

That doesn't mean handing over the map. Humans still steer brand voice, product positioning, crisis response, partner negotiations, privacy decisions and long-term funnel design. You define exclusions, creative tone and ethical boundaries, then let the machine operate inside them. Bold, counterintuitive ideas still need human intuition to get off the ground.

Practical handoff: define KPIs and hard guardrails, set experiment lengths and a creative-refresh cadence, enable automated scaling and alerts, and schedule regular reviews. Before a full rollout, run a small, low-budget pilot to see how the automation performs against your rules.

Operational cadence: glance daily for alerts, triage creatives twice a week, run performance reviews weekly and take a monthly deep-dive for strategy and cohort analysis. When you trade busywork for decision-making time, you'll find more room for storytelling, partnerships and the kind of creative risk machines can't take.

Autopilot Targeting: Smarter Audiences Without the Guessing Game

Imagine handing audience hunting to a tireless data hound that never second-guesses itself. Autopilot targeting uses behavioral signals, micro-conversions, and cross-device footprints to stitch together audiences that actually convert. Instead of tweaking demographics by gut feeling, let algorithms surface pockets of intent you would never find with a spreadsheet and an espresso.

Start smart: feed the system three to five high-quality conversion events and a small, broad seed audience. Use lookalike expansion and give the model an exploration window of 48 to 72 hours without manual interference. Keep creatives varied so the machine can match messaging to emerging segments instead of forcing one-size-fits-all ads.

Protect performance with guardrails. Set conservative CPA targets, enable automated rules to pause underperformers, and create budget-reallocation triggers for the top-performing cohorts. Monitor cohorts, not impressions: track micro-conversions and early LTV signals so the algorithm optimizes toward meaningful business outcomes instead of vanity metrics.
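The pause-and-reallocate rule above can be sketched in a few lines of Python. This is a minimal illustration, not any ad platform's API: it assumes you can export spend, conversions and daily budget per cohort, and the `Cohort` type, the cohort names, the numbers and the 1.5× pause multiple are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    name: str
    spend: float      # spend in the evaluation window
    conversions: int  # conversions attributed in the same window
    budget: float     # current daily budget


def cpa(c: Cohort) -> float:
    """Cost per acquisition; infinite when a cohort has no conversions yet."""
    return c.spend / c.conversions if c.conversions else float("inf")


def reallocate(cohorts: list[Cohort], target_cpa: float,
               pause_multiple: float = 1.5) -> dict[str, float]:
    """Pause cohorts whose CPA exceeds target_cpa * pause_multiple and move their
    budget to cohorts at or under target, in proportion to current budget.
    Cohorts in between keep their budget unchanged."""
    new_budgets = {c.name: c.budget for c in cohorts}
    paused = [c for c in cohorts if cpa(c) > target_cpa * pause_multiple]
    winners = [c for c in cohorts if cpa(c) <= target_cpa]
    freed = sum(c.budget for c in paused)
    for c in paused:
        new_budgets[c.name] = 0.0
    winner_total = sum(c.budget for c in winners)
    if winner_total > 0:  # with no winners, the freed budget is simply held back
        for c in winners:
            new_budgets[c.name] += freed * c.budget / winner_total
    return new_budgets


# Hypothetical numbers for illustration only.
cohorts = [
    Cohort("lookalike_1pct", spend=400, conversions=20, budget=100),  # CPA 20
    Cohort("retargeting", spend=240, conversions=12, budget=50),      # CPA 20
    Cohort("broad", spend=300, conversions=5, budget=60),             # CPA 60
    Cohort("interest_stack", spend=150, conversions=5, budget=40),    # CPA 30
]
budgets = reallocate(cohorts, target_cpa=25)
```

Proportional reallocation is a deliberately simple choice: total spend stays constant, and cohorts that are merely "okay" are left alone rather than rewarded or punished.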

The result is less busywork and more strategic time back in your calendar. Treat autopilot targeting like a collaborator that experiments at scale, surfaces winners, and hands you a shortlist of audiences worth doubling down on. Try a controlled test and watch the machine do the tedious part so you can focus on creative and growth.

Creative on Tap: Prompts, Variations, and A/B Tests in Minutes

Think like a chef and let the robot be your sous-chef: feed it a short, focused brief and watch dozens of on-brand concepts pour out. Start with a crisp objective, a target persona, and one measurable KPI (clicks, leads, or sales). That minimal prompt lets the model produce headlines, captions, image suggestions, and microcopy in minutes, so you can move from blank page to launch without the usual creative bottleneck.

Use a reliable prompt skeleton to get consistent output. For example: Product: describe it in one line. Audience: two defining traits. Benefit: the main advantage. Tone: witty, urgent, or sincere. Format: five short headlines, three caption lengths, and two CTA variants. Submit that once and the system returns a tidy batch you can iterate on, saving hours of back-and-forth.
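To keep that skeleton identical across briefs, you can render it from structured fields. A minimal sketch: the `Brief` type, the `build_prompt` helper and the example product are hypothetical, and the output is plain text you would paste into whichever model you use.

```python
from dataclasses import dataclass

ALLOWED_TONES = ("witty", "urgent", "sincere")


@dataclass
class Brief:
    product: str               # one-line description
    audience: tuple[str, str]  # two defining traits
    benefit: str               # the main advantage
    tone: str                  # one of ALLOWED_TONES


def build_prompt(brief: Brief) -> str:
    """Render the brief into the fixed prompt skeleton, one field per line."""
    if brief.tone not in ALLOWED_TONES:
        raise ValueError(f"tone must be one of {ALLOWED_TONES}, got {brief.tone!r}")
    return "\n".join([
        f"Product: {brief.product}",
        f"Audience: {brief.audience[0]}; {brief.audience[1]}",
        f"Benefit: {brief.benefit}",
        f"Tone: {brief.tone}",
        "Format: five short headlines, three caption lengths, two CTA variants",
    ])


# Hypothetical brief for illustration.
brief = Brief(
    product="Insulated steel water bottle",
    audience=("bike commuters", "outdoor weekenders"),
    benefit="keeps drinks cold all day",
    tone="witty",
)
prompt = build_prompt(brief)
```

Rejecting unknown tones up front keeps a typo from silently producing an off-brand batch.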

Turn those batches into combinatorial variants automatically. Crossing five headlines with three CTAs and four image concepts yields 60 creative combinations (5 × 3 × 4) in one pass; tag each one with a simple naming scheme for tracking. Export a CSV of creative IDs, copy snippets, and asset links so ad platforms can ingest the tests without manual copy-and-paste. That scale is what makes rapid learning possible.
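The combinatorial step and the CSV export are easy to script with the Python standard library. A minimal sketch: the placeholder copy, the asset file names and the `H#-C#-I#` ID scheme are assumptions, and a real platform's bulk-upload template will dictate its own column names.

```python
import csv
import io
from itertools import product

headlines = [f"Headline {n}" for n in range(1, 6)]             # 5 placeholder headlines
ctas = ["Shop now", "Learn more", "Get yours"]                  # 3 CTA variants
images = ["img_a.png", "img_b.png", "img_c.png", "img_d.png"]  # 4 hypothetical assets


def build_variants(headlines, ctas, images):
    """Cross every headline, CTA and image; the ID encodes each component's position."""
    rows = []
    for (h, headline), (c, cta), (i, image) in product(
        enumerate(headlines, 1), enumerate(ctas, 1), enumerate(images, 1)
    ):
        rows.append({
            "creative_id": f"H{h}-C{c}-I{i}",
            "headline": headline,
            "cta": cta,
            "asset": image,
        })
    return rows


def to_csv(rows) -> str:
    """Serialize the variants to CSV text ready to save or upload."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["creative_id", "headline", "cta", "asset"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


rows = build_variants(headlines, ctas, images)
csv_text = to_csv(rows)
```

Encoding positions in the ID means any row in a performance report can be traced back to its exact headline, CTA and image without a lookup table.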

Finally, automate the A/B logic: deploy wide, collect 48 to 72 hours of performance data, then let the algorithm shift budget toward winners and pause losers. Monitor one primary metric, set a minimum sample size, and schedule daily checks. The result: faster optimizations, clearer signals, and a lot more time on your calendar for the work that actually needs a human touch.
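One way to make "set a minimum sample size" and "pause losers" concrete is a two-proportion z-test on CTR against the current leader. This is a sketch under stated assumptions: CTR is treated as the primary metric, the 1,000-impression minimum and the 1.96 cutoff (roughly 95% two-sided confidence) are illustrative defaults, and the variant data is made up.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    creative_id: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def z_score(a: Variant, b: Variant) -> float:
    """Two-proportion z statistic for CTR(a) - CTR(b), using the pooled proportion."""
    pooled = (a.clicks + b.clicks) / (a.impressions + b.impressions)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.impressions + 1 / b.impressions))
    return (a.ctr - b.ctr) / se if se else 0.0


def decide(variants: list[Variant], min_impressions: int = 1000,
           z_crit: float = 1.96) -> dict[str, str]:
    """Label each variant 'collect', 'winner', 'keep' or 'pause'."""
    decisions = {v.creative_id: "collect" for v in variants if v.impressions < min_impressions}
    ready = [v for v in variants if v.impressions >= min_impressions]
    if not ready:
        return decisions
    leader = max(ready, key=lambda v: v.ctr)
    decisions[leader.creative_id] = "winner"
    for v in ready:
        if v is not leader:
            # Pause only when the leader is significantly better, not merely ahead.
            decisions[v.creative_id] = "pause" if z_score(leader, v) > z_crit else "keep"
    return decisions


# Hypothetical results after the first window.
variants = [
    Variant("A", impressions=5000, clicks=150),  # CTR 3.0%
    Variant("B", impressions=5000, clicks=90),   # CTR 1.8%
    Variant("C", impressions=5000, clicks=140),  # CTR 2.8%
    Variant("D", impressions=400, clicks=20),    # not enough data yet
]
decisions = decide(variants)
```

Checking daily and stopping the moment a difference appears inflates false positives; fixing the minimum sample size before launch limits that risk.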

Guardrails On: Budgets, Brand Safety, and When to Hit Pause

Let the machines run the day-to-day, but do not hand them the keys without a padlock. Start by codifying budget rules that reflect human judgment: set hard daily caps, a safety lifetime budget, and a minimum conversion threshold so the algorithm cannot burn cash chasing vanity metrics. Think of it as delegating chores, not playing bankroll roulette.

Brand safety is not optional when autonomy scales. Maintain strict blocklists, enforce placement exclusions, and pre-approve creative clusters before they go live. Use contextual signals and negative keywords to keep ads away from risky content, and back that up with image and sentiment checks to stop tone-deaf moments before they air.

Know when to hit pause by wiring simple, discrete triggers. Examples: cost per acquisition rising 40 percent week over week, CTR collapsing to half its baseline, or frequency climbing into user complaint territory. Automate alerts so a human only needs to intervene when thresholds trip; automate the evaluation, not the final judgment.
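The three example triggers translate directly into a check that only raises alerts and leaves the pause decision to a person. A sketch under assumptions: the `WeeklySnapshot` type is hypothetical, and `max_frequency=4.0` is a placeholder, since "user complaint territory" depends on your audience and should come from your own data.

```python
from dataclasses import dataclass


@dataclass
class WeeklySnapshot:
    cpa: float        # cost per acquisition for the week
    ctr: float        # click-through rate as a fraction (0.012 = 1.2%)
    frequency: float  # average impressions per reached user


def pause_triggers(this_week: WeeklySnapshot, last_week: WeeklySnapshot,
                   baseline_ctr: float, max_frequency: float = 4.0) -> list[str]:
    """Return the names of tripped triggers; an empty list means no alert."""
    tripped = []
    if last_week.cpa > 0 and (this_week.cpa - last_week.cpa) / last_week.cpa >= 0.40:
        tripped.append("cpa_up_40pct_wow")
    if this_week.ctr <= 0.5 * baseline_ctr:
        tripped.append("ctr_below_half_baseline")
    if this_week.frequency >= max_frequency:
        tripped.append("frequency_too_high")
    return tripped


# Hypothetical snapshots for illustration.
last_week = WeeklySnapshot(cpa=20.0, ctr=0.012, frequency=2.1)
this_week = WeeklySnapshot(cpa=29.0, ctr=0.011, frequency=2.4)
alerts = pause_triggers(this_week, last_week, baseline_ctr=0.012)
```

Here CPA rose 45 percent week over week, so only the CPA trigger fires; a person then decides whether to pause.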

Operationalize guardrails with short experiment windows, rollback blueprints, and a human review cadence. Schedule weekly audits, stash creative backups, and keep a readable audit trail for every automated decision. Teach the algorithm your goals with clear KPIs and reward signals so it optimizes the right things.

Practical quick wins:

- 🆓 Budget: hard daily cap plus a cooling period if spend spikes beyond 30 percent.

- 🐢 Safety: enforced placement exclusions and sentiment scans before scaling.

- 🚀 Pause: automated pause when CPA exceeds threshold or CTR drops by half.
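The budget quick win combines a hard clamp with a cooling period. A minimal sketch: it assumes "spend spikes beyond 30 percent" means today's spend exceeds the trailing daily average by more than 30 percent, and the 24-hour cooldown and example date are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional


def allowed_spend(requested: float, daily_cap: float, spent_today: float) -> float:
    """Clamp a spend request so the day's total never exceeds the hard cap."""
    return max(0.0, min(requested, daily_cap - spent_today))


def cooling_until(spent_today: float, trailing_avg: float, now: datetime,
                  spike_threshold: float = 0.30,
                  cooldown: timedelta = timedelta(hours=24)) -> Optional[datetime]:
    """Return when budget increases may resume if today's spend spiked more than
    spike_threshold above the trailing average; None means no spike."""
    if trailing_avg > 0 and spent_today > trailing_avg * (1 + spike_threshold):
        return now + cooldown
    return None


# Hypothetical day: 140 spent against a trailing average of 100 (a 40% spike).
now = datetime(2024, 5, 6, 9, 0)
resume_at = cooling_until(spent_today=140.0, trailing_avg=100.0, now=now)
```

Keeping the clamp and the cooldown as separate functions lets the cap apply on every request while the spike check runs on whatever schedule your alerts use.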

Your 7-Day Launch Plan: KPIs, Quick Wins, and What to Tweak

Start by choosing two primary KPIs and one guardrail metric. For most performance ads those are CPA or ROAS plus CTR as a health check, and landing page conversion rate as a guardrail. Record baseline numbers, set a realistic learning budget, and decide your minimum sample size so the automation has something meaningful to optimize against.
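For the minimum sample size, one common approach (not the only one) is the normal-approximation formula for comparing two conversion rates: n per variant = (z_alpha + z_beta)^2 * (p1(1 - p1) + p2(1 - p2)) / (p1 - p2)^2. A sketch using the standard library's `statistics.NormalDist`; the 3 percent baseline and 20 percent relative lift in the example are hypothetical.

```python
import math
from statistics import NormalDist


def min_sample_per_variant(baseline_rate: float, relative_lift: float,
                           alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors (or clicks) needed per variant to detect the given relative lift
    in a conversion rate with a two-sided test at significance alpha."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


# Hypothetical: 3% baseline conversion rate, detect a 20% relative lift (3.0% -> 3.6%).
n = min_sample_per_variant(baseline_rate=0.03, relative_lift=0.20)
```

The result, roughly 14,000 per variant, shows why small lifts on low conversion rates need a larger learning budget than a quick glance suggests.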

Run a tight 7-day sprint:

- Day 1: launch with three creatives and three audience seeds.

- Day 2: kill the bottom performers by CTR.

- Day 3: let automated bidding hunt for cheaper conversions.

- Day 4: introduce a fresh creative variation.

- Day 5: test a minor audience expansion.

- Day 6: reassess CPA trends.

- Day 7: decide whether to scale, iterate, or pause and regroup.

- 🆓 Quick Win: swap the headline and thumbnail on the lowest-CTR ad and look for an immediate lift.

- 🐢 Slow Win: expand lookalikes only after CPA has held stable for 48 hours, to avoid costly drift.

- 🚀 Scale: increase budget on winners that beat target CPA by 20 percent, and keep automated rules in place to cap risk.
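The scale rule reads as "CPA at least 20 percent below target". A minimal sketch of a staged increase under that reading; the 20 percent step and the optional `max_budget` ceiling are assumptions standing in for whatever automated caps you use.

```python
from typing import Optional


def next_budget(cpa: float, target_cpa: float, budget: float,
                step: float = 0.20, max_budget: Optional[float] = None) -> float:
    """Raise the budget by one step only when CPA beats target by 20 percent or more;
    otherwise hold it. max_budget, if given, caps the result."""
    if cpa <= 0.8 * target_cpa:
        new_budget = budget * (1 + step)
        return min(new_budget, max_budget) if max_budget is not None else new_budget
    return budget


# Hypothetical ad sets against a target CPA of 20.
raised = next_budget(cpa=15.0, target_cpa=20.0, budget=100.0)                   # beats target by 25%
capped = next_budget(cpa=15.0, target_cpa=20.0, budget=100.0, max_budget=110.0)
held = next_budget(cpa=18.0, target_cpa=20.0, budget=100.0)                     # only 10% under target
```

Applying one step per review cycle, rather than jumping straight to a large budget, gives the algorithm time to re-stabilize between increases.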

Watch for tweak triggers: rising CPM with falling CTR signals creative fatigue; high frequency combined with audience overlap points to a need for tighter segmentation; sudden CPA spikes suggest bid or attribution shifts. Change only one variable at a time and give the algorithm 24 to 48 hours after each tweak before judging its impact.
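The tweak triggers can be encoded as simple rules that name a likely cause rather than act on it. A sketch under assumptions: inputs are week-over-week changes as fractions (0.15 = +15%), and the frequency, overlap and CPA-spike thresholds are hypothetical placeholders.

```python
def diagnose(cpm_change: float, ctr_change: float, frequency: float,
             audience_overlap: float, cpa_change: float,
             max_frequency: float = 3.0, max_overlap: float = 0.30,
             cpa_spike: float = 0.40) -> list[str]:
    """Return likely causes for the observed metric pattern, in a fixed order."""
    causes = []
    if cpm_change > 0 and ctr_change < 0:
        causes.append("creative_fatigue")    # refresh creatives
    if frequency >= max_frequency and audience_overlap >= max_overlap:
        causes.append("segmentation")        # split or exclude overlapping audiences
    if cpa_change >= cpa_spike:
        causes.append("bid_or_attribution")  # review bid strategy and attribution settings
    return causes


# Hypothetical week: CPM up 15%, CTR down 20%, everything else calm.
findings = diagnose(cpm_change=0.15, ctr_change=-0.20, frequency=2.0,
                    audience_overlap=0.10, cpa_change=0.05)
```

Because the function only reports causes, it fits the one-variable-at-a-time rule: pick one finding, make one change, then wait 24 to 48 hours.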

Wrap the week with clear decision rules: scale winners with staged budget increases, refresh creatives that show fatigue, or reallocate budgets to other experiments. Let automation handle micro-optimizations while you focus on strategy and the next creative breakthrough.