Are Instagram Ads Still Worth It? The ROI Plot Twist You Didn't See Coming

Swipe-Stopping or Budget-Draining? How to Tell in 10 Minutes

Think of this as a 10-minute audit that turns guesswork into action. Start with a quick review of your top-performing ad: watch the first three seconds on mute, scan the caption, and open the ad report. The goal is simple: find one glaring win to scale and one glaring leak to stop. If both are missing, you are running an experiment, not a campaign.

- CTR: a quick signal. Above 1% usually means creative and targeting are aligned; 0.2–1% means test new hooks; under 0.2% is a budget drain.

- View retention: for videos, a 3-second view rate above 50% is okay; 75% or more is excellent.

- CPA vs margin: if cost per action is more than 30% of average order value, pause and fix before scaling.
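If you would rather not eyeball the report every week, the three checks above can be sketched as a small function. This is a rough illustration, not a platform feature: the function name, the input names, and the "weak" label for a 3-second view rate of 50% or less are assumptions, since the text only names the "okay" and "excellent" bands.

```python
def audit_ad(ctr_pct, view_rate_3s_pct, cpa, avg_order_value):
    """Grade one ad against the 10-minute audit thresholds."""
    # CTR bands: above 1% aligned, 0.2-1% test new hooks, under 0.2% drain.
    if ctr_pct > 1.0:
        ctr = "aligned"
    elif ctr_pct >= 0.2:
        ctr = "test new hooks"
    else:
        ctr = "budget drain"

    # 3-second view rate: above 50% okay, 75% or more excellent.
    if view_rate_3s_pct >= 75:
        retention = "excellent"
    elif view_rate_3s_pct > 50:
        retention = "okay"
    else:
        retention = "weak"  # assumption: the audit does not name this band

    # CPA above 30% of average order value means pause before scaling.
    cpa_share = cpa / avg_order_value
    margin = "pause and fix" if cpa_share > 0.30 else "ok to scale"

    return {"ctr": ctr, "retention": retention, "cpa_vs_aov": margin}


# Made-up numbers for illustration: 1.4% CTR, 62% 3s view rate, $12 CPA, $50 AOV.
result = audit_ad(ctr_pct=1.4, view_rate_3s_pct=62, cpa=12.0, avg_order_value=50.0)
print(result)
```

Pass in percentages as they appear in the ad report (1.4 for 1.4%), not fractions.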

Next, a three-step creative triage you can run in minutes: swap the first frame, shorten the caption to one clear line with a single CTA, and duplicate the ad with a different audience slice. While you are at it, add a thumbnail with a clear face or product close-up. Run these micro tests for 24–48 hours. If one variation pulls ahead fast, double down and reallocate budget from the losers.

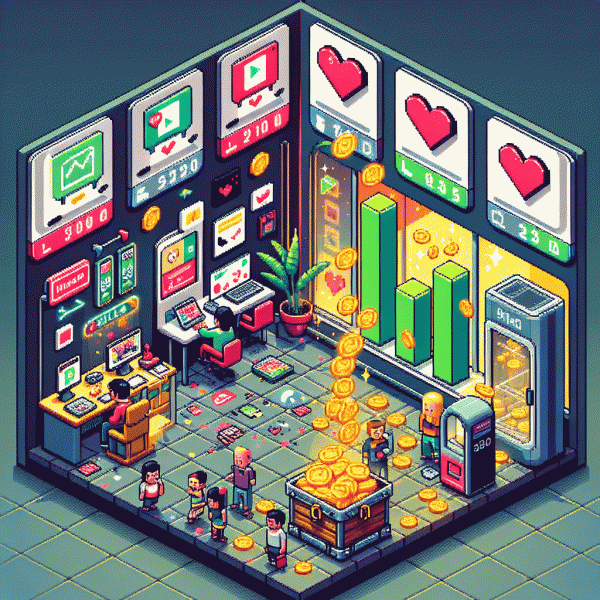

Decision rubric: if one ad hits good CTR and acceptable CPA, scale in 20–30% increments. If all ads fail the 10-minute checks, cut spend and rework creative or offer. Small tweaks often flip ROI faster than massive budget moves. Do this quick audit weekly and treat ads like a lab, not a vending machine. That is where you will find the plot twist.

The 3 Metrics That Decide If You Scale or Bail

Deciding whether to pour more budget into Instagram ads or cut bait comes down to three cold, delightful numbers. Treat them like a traffic light: green = scale, yellow = iterate, red = stop. Below are the three metrics that give a clean thumbs-up or thumbs-down — plus precise guardrails so your next move is more poker face than panic.

Metric 1 — ROAS and CPA: Return on ad spend tells you if the math works. Rule of thumb: scale campaigns that deliver ROAS >= 3x or CPAs below your target acquisition cost; increase budget in modest steps (20–30% daily) and monitor performance. If a campaign fails to hit targets after a reliable test period (7–14 days or a statistically meaningful sample), pause and rework creative or audience.
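The arithmetic behind Metric 1 is short enough to sketch. The function names and the example figures below are illustrative assumptions; the only rules taken from the text are ROAS of 3x or better, or CPA below your target.

```python
def roas(revenue, ad_spend):
    """Return on ad spend: revenue earned per unit of currency spent."""
    return revenue / ad_spend


def should_scale(revenue, ad_spend, conversions, target_cpa, min_roas=3.0):
    """True if the campaign clears either bar: ROAS >= min_roas or CPA < target."""
    # With zero conversions, CPA is treated as infinite so it can never pass.
    cpa = ad_spend / conversions if conversions else float("inf")
    return roas(revenue, ad_spend) >= min_roas or cpa < target_cpa


# Made-up campaign: $1,600 revenue on $500 spend, 40 conversions, $15 CPA target.
print(roas(1600.0, 500.0))                  # 3.2
print(should_scale(1600.0, 500.0, 40, 15.0))  # True
```

Feed it numbers from a full test window (the 7–14 days mentioned above) rather than a single good day.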

Metric 2 — Engagement and funnel conversion: High click-through rates paired with weak landing-page conversions mean a funnel leak. If CTR is healthy (roughly > 1.5%) but landing conversion is low, do not scale until the page is fixed. Also monitor frequency — once ads hit frequency around 3–4 and performance drops, refresh creative. Low CTR is a creative problem; low conversion is a product or UX problem.
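One way to keep the Metric 2 diagnosis honest is to write it down as rules. In the sketch below, the 1.5% CTR line and the frequency of 3 come from the text; the 2% landing-page conversion floor (`min_landing_cvr_pct`) is purely an assumed placeholder, because the text only says "low". Swap in your own baseline.

```python
def diagnose_funnel(ctr_pct, landing_cvr_pct, frequency, performance_dropping,
                    min_landing_cvr_pct=2.0):
    """List the likely problems for one ad, based on the Metric 2 rules."""
    findings = []

    # Low CTR points at the creative.
    if ctr_pct <= 1.5:
        findings.append("low CTR: creative problem")

    # Low landing conversion points at the product or page.
    # Assumption: min_landing_cvr_pct is a placeholder threshold.
    if landing_cvr_pct < min_landing_cvr_pct:
        findings.append("low conversion: fix page or offer before scaling")

    # Fatigue: frequency around 3-4 combined with falling performance.
    if frequency >= 3 and performance_dropping:
        findings.append("ad fatigue: refresh creative")

    return findings


# Healthy CTR, weak landing page, audience starting to tire.
print(diagnose_funnel(ctr_pct=2.1, landing_cvr_pct=0.8,
                      frequency=3.4, performance_dropping=True))
```

An empty list means none of the three warning signs fired, not that the ad is profitable; pair it with the ROAS and CPA check.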

Metric 3 — LTV:CAC and retention: Short-term wins are cute; long-term customers pay the bills. Aim for an LTV to CAC ratio north of 3:1 before aggressive scaling. If LTV:CAC sits below about 1.5 after cohort analysis, stop and rethink pricing, funnels, or onboarding. Use cohort windows (30–90 days) and small budget tests to validate before pouring fuel on the fire.
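For a quick read on Metric 3, here is a simplified sketch of the ratio and the traffic-light call. It assumes LTV is just cohort revenue per customer over your chosen window (30–90 days) and ignores margin, refunds, and repeat-purchase forecasting. The function name and the sample cohort are made up.

```python
def ltv_cac_verdict(cohort_revenue, cohort_customers, acquisition_spend):
    """Return (ratio, verdict) for one acquisition cohort."""
    # Simplification: LTV is revenue per customer inside the cohort window.
    ltv = cohort_revenue / cohort_customers
    cac = acquisition_spend / cohort_customers
    ratio = ltv / cac

    if ratio > 3.0:
        verdict = "scale"             # north of 3:1
    elif ratio < 1.5:
        verdict = "stop and rethink"  # pricing, funnels, or onboarding
    else:
        verdict = "iterate"           # in between: keep testing small
    return round(ratio, 2), verdict


# Made-up 90-day cohort: 100 customers, $9,000 revenue, $2,500 spent to acquire them.
print(ltv_cac_verdict(9000.0, 100, 2500.0))  # (3.6, 'scale')
```

The "iterate" band between 1.5 and 3 is an interpretation of the traffic-light framing, not a threshold the rules spell out.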

Creative That Converts: Hooks, CTAs, and UGC That Actually Work

Stop the scroll in the first three seconds. A thumb-stopping hook is not a cute caption; it is the ad's lifeline. Think of it as speed dating for attention. Open with a surprising visual, a pointed question, or a tiny action that promises a payoff.

Make the hook testable: Variant A = a human face with direct eye contact, B = the product in motion, C = a perplexing statement that creates curiosity. Add a bold two- to three-word benefit overlay so users know why to stay. Keep the intro tight on Reels and Stories: one to two seconds when possible.

CTAs that convert avoid generic commands. Swap “Learn More” for micro CTAs like “See How,” “Get My Code,” or “Try 15s,” and link each to an explicit next step. Put a low-friction CTA early and a clear conversion CTA near the end; test placement across carousels and story sequences.

UGC wins when it feels real. Brief creators to show a before, a quick demo, and one line about the outcome; capture raw audio and add captions. Repurpose standout comments as on-screen social proof, and always request reuse permission so you can scale winning clips.

Treat creative as an engine: A/B test three hooks, rotate CTAs weekly, and scale the UGC that lowers CPA. Run each variant for seven days, measure the impact on conversion and LTV, then double down on the combinations that actually move the needle.

Targeting in 2026: What's Changed and What Still Prints Money

Privacy didn't kill targeting — it reshaped it into something smarter and slightly weirder. Third-party cookies and broad interest buckets are less reliable in 2026, so signals that actually mean something have taken center stage: first-party events (views, saves, add-to-cart), creative engagement (rewatches, sound-on rates), in-app commerce interactions and AR try-ons. The upside? These signals are stickier and much better predictors of conversion, so your audience slices can be smaller and louder instead of huge and silent.

Practical moves: stitch identity across touchpoints with a robust server-side conversion setup, prioritize high-value events (not just clicks), and let your bid strategy learn on events that correlate with lifetime value. Split audiences by intent and intent velocity — a “viewed twice in 48h” group will beat a cold interest bucket — and serve sequential creative that matches intent stage. Keep creative fresh: micro-personalized hooks, short Reels-first spots, and captions that invite comments will keep the platform's engagement machine feeding your targeting models.
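To make "intent velocity" concrete, here is a rough sketch that builds the "viewed twice in 48h" group from a list of first-party view events. The event shape (a user id paired with a timestamp) and the function name are assumptions for illustration; a real pipeline would read these from your own event store, not from an in-memory list.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def high_velocity_viewers(view_events, min_views=2, window=timedelta(hours=48)):
    """Return the user ids with at least `min_views` views inside any `window`."""
    views_by_user = defaultdict(list)
    for user_id, viewed_at in view_events:
        views_by_user[user_id].append(viewed_at)

    segment = set()
    for user_id, times in views_by_user.items():
        times.sort()
        # Compare each view with the one (min_views - 1) positions later.
        for i in range(len(times) - min_views + 1):
            if times[i + min_views - 1] - times[i] <= window:
                segment.add(user_id)
                break
    return segment


# Hypothetical events: (user_id, timestamp of a product or video view).
events = [
    ("ana", datetime(2026, 3, 1, 9, 0)),
    ("ana", datetime(2026, 3, 2, 20, 0)),   # second view 35 hours later
    ("ben", datetime(2026, 3, 1, 9, 0)),
    ("ben", datetime(2026, 3, 5, 9, 0)),    # second view 4 days later
    ("cy", datetime(2026, 3, 3, 12, 0)),    # single view
]
print(high_velocity_viewers(events))  # {'ana'}
```

Tightening `window` or raising `min_views` gives you the smaller, louder slices described above; the resulting ids would then feed whatever custom-audience upload your setup uses.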

Here are three audience plays that still print money when done right:

- 🆓 Free: warm retargeting (video viewers → story offers) for low-cost conversions and quick validation

- 🤖 Automated: AI lookalikes seeded with high-LTV customers and engagement signals, not just purchases

- 🔥 High-Intent: cart-abandon + checkout completers with sequential discounts and urgency creative

Measure more than click-throughs: watch ROAS by cohort, CAC trending for new vs nurtured buyers, and three-month retention to validate your "prints money" claim. Build experiments with tight hypotheses, run them long enough for the learning to stick, then scale winners aggressively while pruning leaky audiences. Targeting in 2026 is less about hacking reach and more about orchestrating small, profitable moments — and that's where Instagram ads still surprise people who thought the ROI fairy left town.

Budget Blueprint: Start Small, Learn Fast, Scale Smart

Think of your ad budget as an experimental lab, not a blank check. Start with a micro budget for each hypothesis — small spends let you test creative hooks, captions, and audiences without wrecking your CPA. Run each test for a compact window, typically 3–7 days, so signals are clear and you avoid premature scaling mistakes.

Design one clear KPI per test: CTR to validate creative, CPC to judge interest, CPA for the bottom line. Keep tests clean by changing only one variable at a time. Allocate a tiny slice of daily spend to new ideas and the rest to proven performers, then let the data pick the winners, reviewing results on a strict 48–72 hour cadence.

Apply simple decision rules so emotion does not steer the budget. If a variant beats your CPA target by 20 percent after the test window, increase its budget by 20–30 percent and monitor for 2–3 days. If a variant's CPA is running at twice the target or worse, pause it and reallocate those dollars to better performers or fresh experiments.
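Those two rules fit in a few lines, which is a good test of whether they are really rules. The sketch below assumes a 25% step (the midpoint of the 20–30 percent range) and treats everything between the two thresholds as "hold"; both choices, like the function name, are illustrative.

```python
def next_budget(current_budget, cpa, target_cpa, step=0.25):
    """Return (action, new_daily_budget) for one variant after its test window."""
    # Beats the CPA target by 20 percent or more: scale one step.
    if cpa <= target_cpa * 0.80:
        return "scale", round(current_budget * (1 + step), 2)

    # CPA at twice the target or worse: pause and free up the spend.
    if cpa >= target_cpa * 2:
        return "pause", 0.0

    # Assumption: anything in between keeps its budget and keeps running.
    return "hold", current_budget


# Made-up variants, all on $100/day with a $25 CPA target.
print(next_budget(100.0, cpa=18.0, target_cpa=25.0))  # ('scale', 125.0)
print(next_budget(100.0, cpa=55.0, target_cpa=25.0))  # ('pause', 0.0)
print(next_budget(100.0, cpa=24.0, target_cpa=25.0))  # ('hold', 100.0)
```

After a "scale" result, wait out the 2–3 day monitoring period before running the check again, so each step is judged on fresh data.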

Use a tiered approach to manage risk and upside:

- 🆓 Near-free: dedicate minimal spend to content tests and organic boosts to collect baseline engagement metrics.

- 🐢 Slow: use conservative daily budgets to validate audiences before committing major funds.

- 🚀 Fast: scale winners incrementally with automated rules and close monitoring to maintain ROAS.

Budget discipline is a learning loop: test, measure, iterate, then scale. Treat early spend as research capital, document outcomes, and let the numbers guide enlargement decisions so growth is profitable, predictable, and fun.